Coding Test Showdown

Plus: Xbox outage, YouTube upscaling, and ElevenLabs’ commoditized audio prediction.

Here’s what’s on our plate today:

🧪 AI labs adopt new coding benchmark for real-world tests.

🎮 Xbox, Azure, 365 outage, AI audio shift, YouTube upscaling.

✅ 3 Weekend To-Dos: Test, debug, and evaluate agents.

🗳️ Are coding benchmarks a true signal of skill?

Let’s dive in. No floaties needed…

The Laboratory

Why are AI labs shifting to real-world coding benchmarks?

The current global economic structure rests upon the idea of technological innovations that enable a skilled, educated, and dynamic workforce to enhance productivity. From steam engines to AI, each wave of technology has redefined productivity. However, this time, the machines aren’t just enhancing workers; they are being positioned as a replacement.

With the personal computer and later the internet, the focus shifted from enhancing physical strength to enhancing brainpower. For decades, the decision to transition to a new technology was an easy one. With AI, however, it is not as simple as updating to the latest software or hardware. The decision enterprises and workers now face is an existential one: should they believe the claims AI labs make about the capabilities of their models, or should they wait for the tools to be thoroughly vetted before relying on them?

AI companies like OpenAI, Google, Anthropic, and Meta are positioning their tools as a means to automate day-to-day workflows, enhance human abilities, and even replace human workers. Before any replacement can take place, companies will have to prove that AI tools can perform on par with, if not better than, human workers.

For AI companies, one of the biggest frontiers for proving their tools is coding.

According to a recent report from The Information, two of the most well-known names in the AI space, OpenAI and Anthropic, are turning to Cognition’s coding test. So far, companies have been relying on several publicly available as well as in-house benchmarks to assess the coding abilities of their large language models. The shift signals a deeper change in how AI companies are viewing the capabilities of their LLMs and how they plan to market them to businesses.

Why coding skills matter

Coding is the craft of writing precise instructions that make software run everything from mobile apps and databases to payment gateways and industrial control systems. In a way, code written by humans forms the lynchpin of modern economic activity, and without it, the technological world would come crashing down.

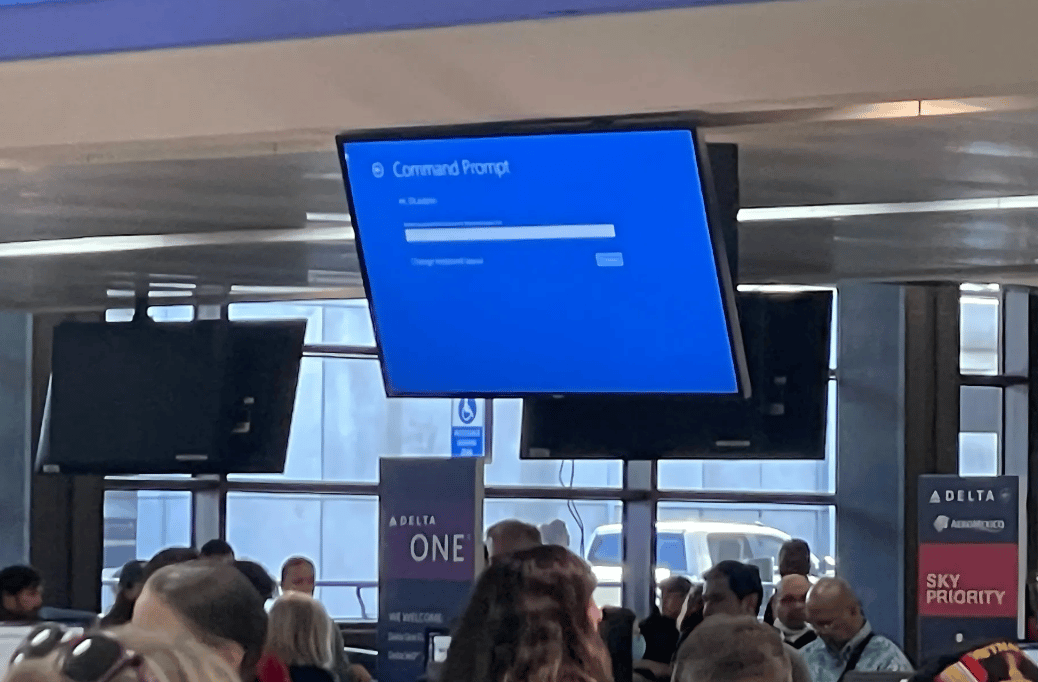

Even minor mistakes, known as bugs, can ruin the functionality of systems, as witnessed when a bug in CrowdStrike’s code affected Windows systems and brought about one of the largest IT outages in history.

Reflecting the importance of coders, the U.S. Bureau of Labor Statistics projects that software development roles will grow by around 15% between 2024 and 2034, much faster than the average for all occupations.

Against this backdrop, AI labs are looking to prove their models can do more than assist human coders: they want to show the models can ship working software.

For labs, Microsoft’s early success in the world of coding is exemplary. The company’s GitHub Copilot, launched in 2021, reported over $500 million in revenue by 2024. As of 2025, it has around 20 million users. The tool is used by 90% of the Fortune 100, and its enterprise customer base has grown by about 75% compared to the first quarter of 2025.

However, to demonstrate what their models can do, AI labs have to show they can move beyond toy questions to agentic, end‑to‑end tasks in realistic environments and deliver bug-free software.

What is Cognition’s coding test?

Cognition is a U.S.-based AI company that develops AI systems designed to work like software engineers. One of the company’s key products is Devin, an AI software engineer capable of navigating codebases, finding bugs, writing patches, deploying apps, and collaborating with humans.

To evaluate Devin, the company used SWE-bench, an automated benchmark for software engineering systems that was originally developed by academic researchers. The test differs from typical coding challenges because it uses real issues taken from open-source Python projects hosted on GitHub. Each issue in the dataset comes with unit tests that fail before the fix and a pull request that contains the correct fix. It therefore allows reviewers to cross-check how an AI system approaches a problem and compare its results with those of human coders.

During the test, AI models are given the code repository and the problem description, and they must generate a patch that successfully resolves the issue so that all tests pass afterward. This setup provides a realistic measure of how well an AI can understand, reason about, and fix real-world software problems.
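To make the pass/fail logic described above concrete, here is a minimal sketch of how a benchmark in this style might score a model. It is an illustration under stated assumptions, not the actual SWE-bench or Cognition harness: the names `TaskInstance`, `is_resolved`, and `resolve_rate` are hypothetical, and the test results are assumed to have already been collected by running the repository's test suite after applying the model's patch.

```python
from dataclasses import dataclass, field


@dataclass
class TaskInstance:
    """One benchmark task (hypothetical structure): an issue plus its tests."""
    issue_text: str
    # Tests that fail before the fix and must pass once the patch is applied.
    fail_to_pass: list[str]
    # Tests that already passed and must keep passing (no regressions).
    pass_to_pass: list[str] = field(default_factory=list)


def is_resolved(task: TaskInstance, results: dict[str, bool]) -> bool:
    """A task counts as resolved only if every required test passed.

    `results` maps test names to pass/fail after the patch was applied.
    A test missing from `results` is treated as a failure.
    """
    required = task.fail_to_pass + task.pass_to_pass
    return all(results.get(name, False) for name in required)


def resolve_rate(tasks: list[TaskInstance],
                 all_results: list[dict[str, bool]]) -> float:
    """Fraction of tasks whose patch made all required tests pass."""
    if not tasks:
        return 0.0
    resolved = sum(is_resolved(t, r) for t, r in zip(tasks, all_results))
    return resolved / len(tasks)
```

The point of the design is that partial credit does not exist: a patch that fixes the reported bug but breaks a previously passing test still counts as a failure, which is what makes this kind of score harder to game than a toy coding quiz.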

According to Cognition, tests like SWE-bench are essential for tracking progress toward AI that can genuinely assist or even lead software development projects.

Will shifting to Cognition’s test help AI labs?

Companies like OpenAI and Anthropic are turning to Cognition’s AI test because they need harder, more realistic evaluations to compare models, tune agent stacks, and make credible claims to enterprises buying coding tools.

According to The Information, both OpenAI and Anthropic are adopting Cognition’s coding test to benchmark model progress, a sign that third‑party, task‑grounded evaluations are becoming de facto yardsticks. This fits into the larger pattern where companies are leaning into cross‑lab evaluations.

Both companies recently announced they ran joint safety tests on each other’s models, and are shipping coding‑centric products: OpenAI’s reasoning models (o1) and coding workstreams, Anthropic’s Claude hybrid reasoning line, and Claude Code, aimed squarely at professional development workflows.

Google and Microsoft are on the same trajectory. Google has made Gemini Code Assist generally available and keeps adding agent mode and CLI features, while Microsoft continues to expand Copilot across the stack, tying model usage to the cloud.

The test, then, lends credibility to the claims AI labs make about their models’ coding abilities.

How viable is the test?

For AI companies, the performance of their models within controlled environments may signal growth; however, it does little to convince enterprise customers to invest in the tools. As a signal of coding ability, Cognition’s test is a strong step up from static benchmarks because it measures whether an agent can plan, use tools, and deliver verifiably correct outputs in messy environments.

However, the test is not flawless. Not everything about Cognition’s test setup is publicly accessible. Certain parts of the evaluation system, such as a private dataset called cognition-golden, are proprietary. This makes it difficult for outside researchers to verify or reproduce the company’s results.

There’s also a chance that the model may have seen parts of the test data during training (known as data contamination), which could make its performance seem better than it really is. Even Cognition acknowledges that there are potential flaws in how such tests are set up and evaluated.
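One rough way to picture a contamination check is to measure how much of a benchmark item appears word for word in a sample of training text. The sketch below is only an illustration of that idea: the function names `ngrams` and `overlap_ratio` and the 8-word window are assumptions made for this example, and nothing here describes how Cognition or any lab actually screens its data.

```python
def ngrams(text: str, n: int = 8) -> set[tuple[str, ...]]:
    """Return the set of n-word sequences in `text` (whitespace tokenized)."""
    tokens = text.split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap_ratio(benchmark_text: str, corpus_text: str, n: int = 8) -> float:
    """Share of the benchmark item's n-grams that also occur in the corpus.

    A value near 1.0 suggests the item may have leaked into training data;
    a value near 0.0 suggests it probably was not copied verbatim.
    """
    bench = ngrams(benchmark_text, n)
    if not bench:
        # Item is shorter than n words, so there is nothing to compare.
        return 0.0
    return len(bench & ngrams(corpus_text, n)) / len(bench)
```

A check like this only catches verbatim copies. Paraphrased or reformatted leaks would slip through, which is one reason contamination is hard to rule out from the outside.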

Another thing to note is that the supposed boost in coding productivity from AI tools like Devin doesn’t always translate into real workplaces.

Research shows that the effect of AI depends a lot on context. Experienced developers working on code they already know might actually slow down when using AI because they have to double-check or fix what the AI suggests. So, even if Cognition’s AI performs well on benchmarks, that doesn’t automatically mean it will save companies time or money unless it’s deployed carefully and used in the right way.

What is the right approach to deploying AI systems?

Technology has played a key role in improving productivity. Technological advancements and their impact have become a part of larger debates shaping policies, realigning social structures, and reimagining economic activity.

With AI, these debates have intensified, particularly around machines replacing humans in the workforce. For many, the fear of losing their jobs to AI tools feels real; however, before any clear picture of the future emerges, AI companies will have to prove their models' abilities.

AI models will have to be evaluated outside of controlled sandboxes, on real-world problems. This has not always gone well for AI tools. Even companies like OpenAI have struggled to combat problems like hallucinations and alignment.

As for enterprises, a third-party test like Cognition’s offers greater assurance that a model’s real capabilities will show through. However, they will have to keep a close eye on model performance. After all, a test, regardless of where and how it is conducted, is not the same as the real world, where one bug can shut down the global information system.

TL;DR

AI labs are shifting to real-world coding benchmarks, such as SWE-bench and Cognition’s coding test, to prove their models can build functional software, not just answer toy problems.

Coding matters because it underpins global tech infrastructure, and even minor bugs (like CrowdStrike’s) can cause massive disruptions.

SWE-bench uses real GitHub issues to test AI agents on debugging and patching software, but concerns remain about the transparency of Cognition’s evaluation setup and dataset contamination.

Even strong benchmark performance doesn’t guarantee real-world productivity gains; deployment context, oversight, and reliability still make or break outcomes.

Headlines You Actually Need

AI audio = future commodity? ElevenLabs CEO says models will soon lose differentiation.

Microsoft outage hits Azure, Xbox, and 365: Global service disruption sparks cloud reliability concerns.

YouTube gets sharper: AI upscaling now improves low-resolution videos automatically.

Weekend To-Do

Explore the SWE-bench dataset on GitHub. Whether you code or not, it’s a useful way to see how real-world AI evaluation is evolving.

Install Continue—a free, open-source AI coding assistant that runs inside VS Code. Compare its suggestions with your usual workflow or just play with it on a side project.

And last… audit where AI tools help or harm: Pick one tool you use at work that includes AI, and document whether it actually saves time or creates more work. If it’s the latter, rethink or disable it.

Friday Poll

🗳️ Do you trust AI coding agents to ship production-level code?
