- Roko's Basilisk

RAISE Act Redraws the Map

Plus: Lenovo’s Qira, Intel’s Articul8, and Grok’s deepfake fallout.

Here’s what’s on our plate today:

🧪 New York’s RAISE Act and America’s AI governance split.

🧠 Qira, Articul8, and Grok’s deepfake mess, in one scroll.

🛠️ Three hands-on ways to stress-test your AI governance.

🗳️ Poll: Who should set the toughest AI rules?

Let’s dive in. No floaties needed…

The context to prepare for tomorrow, today.

Memorandum distills the day’s most pressing tech stories into one concise, easy-to-digest bulletin, empowering you to make swift, informed decisions in a rapidly shifting landscape.

Whether it’s AI breakthroughs, new startup funding, or broader market disruptions, Memorandum gathers the crucial details you need. Stay current, save time, and enjoy expert insights delivered straight to your inbox.

Streamline your daily routine with the knowledge that helps you maintain a competitive edge.

*This is sponsored content

The Laboratory

Why New York’s RAISE Act signals a new phase in America’s AI governance battle

Generations of human endeavour have prospered by adhering to the core principle of the rule of law, which ensures that the ideas fuelling growth do not come at the expense of individual rights. The principle, though not without flaws, has worked so far. And to keep the rule of law working, laws have had to be updated, rethought, and even repealed as times and collective aims have changed.

In technology, the law faces a distinctive problem: how do you regulate a field that keeps evolving at lightning speed without trampling innovation? With artificial intelligence, the challenge has moved beyond controlling the means of communication to regulating a technology poised to affect almost every aspect of human existence.

Since OpenAI opened public access to its models with ChatGPT in late 2022, regulators around the world have spent considerable time and resources working out how this technology can be regulated to limit its harms to society.

Throughout 2025, regulators struggled to draw up blueprints for AI regulation. And even where a roadmap existed, as in the EU, putting it into practice proved difficult.

In the U.S., this roadmap is now at a crossroads. On one hand, the federal government under President Trump wants to ensure that regulations do not hinder innovation. On the other, the states are trying to protect their citizens' rights amid the AI race.

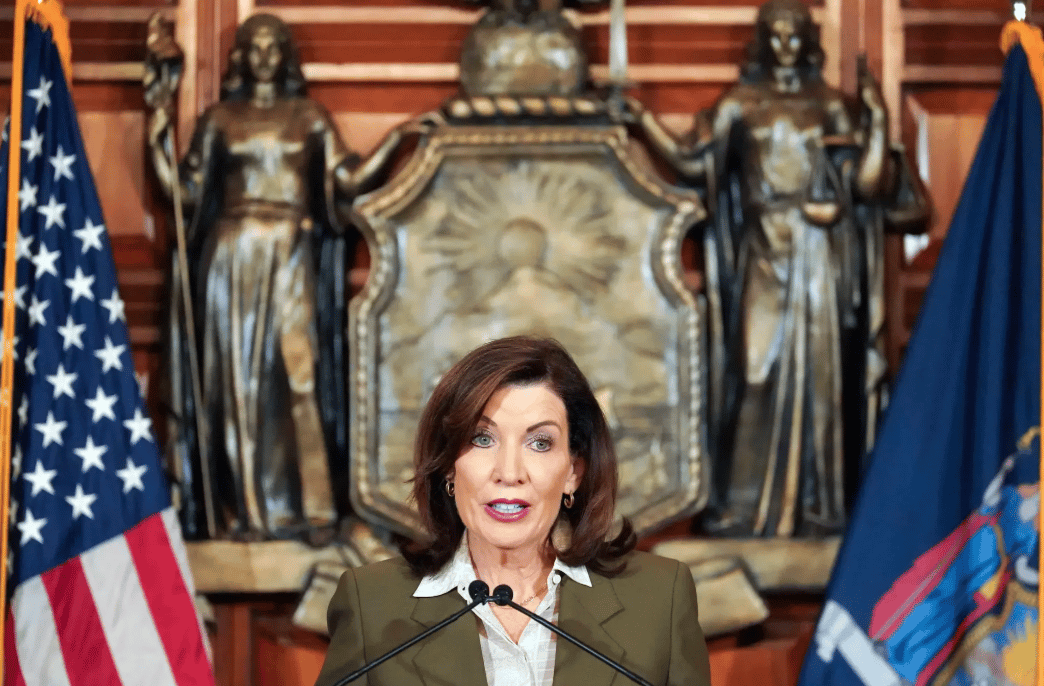

Recently, Governor Kathy Hochul signed New York's Responsible AI Safety and Education Act. The act is the state's bid to establish a de facto regulatory framework for frontier AI while Washington remains gridlocked.

Inside the RAISE Act

New York's Responsible AI Safety and Education (RAISE) Act requires large developers, those with more than $500 million in annual revenue who train AI models exceeding 10^26 computational operations, to publish safety protocols, report incidents to state authorities within 72 hours, and submit to oversight by a new office within the Department of Financial Services.
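For teams running a first-pass coverage check, the two tests above reduce to a simple "both must be true" rule. The Python sketch below is a simplification that assumes only the revenue and compute thresholds as summarized here; `is_covered_developer` and the constant names are hypothetical, and the statute's own definitions should be checked before relying on the answer.

```python
# Simplified coverage test for New York's RAISE Act, using only the two
# thresholds summarized in this article. Not legal advice: the statute's own
# definitions (and any later amendments) control.
REVENUE_THRESHOLD_USD = 500_000_000   # "more than $500 million in annual revenue"
COMPUTE_THRESHOLD_OPS = 10**26        # training runs "exceeding 10^26" operations


def is_covered_developer(annual_revenue_usd: int, largest_training_run_ops: int) -> bool:
    """Hypothetical helper: True only if BOTH thresholds are exceeded.

    Pass integers (e.g. 3 * 10**26) rather than floats such as 1e26, which
    cannot represent 10**26 exactly and can flip a borderline comparison.
    """
    return (annual_revenue_usd > REVENUE_THRESHOLD_USD
            and largest_training_run_ops > COMPUTE_THRESHOLD_OPS)


print(is_covered_developer(2_000_000_000, 3 * 10**26))  # True: both exceeded
print(is_covered_developer(2_000_000_000, 5 * 10**25))  # False: compute below threshold
print(is_covered_developer(400_000_000, 3 * 10**26))    # False: revenue below threshold
```

Because both conditions are strict inequalities, a developer sitting exactly at either threshold is not covered under this reading.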

In practical terms, the act targets companies like OpenAI, Anthropic, Google, and Meta, along with the handful of other developers pushing the boundaries of what AI can do. For covered developers, the requirements are concrete.

They must publish detailed safety and security protocols explaining how they will prevent "critical harm", defined as incidents causing 100 or more deaths or serious injuries, or at least $1 billion in damage. They must also report safety incidents to the state, and they answer to the new oversight office within the Department of Financial Services, which is funded by fees the office sets itself.
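The "critical harm" bar is an either/or test, which is easy to misread when writing scenario playbooks. Below is a minimal Python screen that assumes only the two thresholds as summarized above. The helper name is hypothetical, and the full legal definition may attach further conditions (for instance, about how the model contributed to the harm) that this sketch ignores.

```python
# "Critical harm" as summarized in this article: 100 or more deaths or serious
# injuries, or at least $1 billion in damage. A simplified screen only.
CASUALTY_THRESHOLD = 100
DAMAGE_THRESHOLD_USD = 1_000_000_000


def meets_critical_harm_threshold(deaths_or_serious_injuries: int, damage_usd: int) -> bool:
    """Hypothetical helper: either condition alone is enough (note the 'or')."""
    return (deaths_or_serious_injuries >= CASUALTY_THRESHOLD
            or damage_usd >= DAMAGE_THRESHOLD_USD)


print(meets_critical_harm_threshold(100, 0))            # True: casualty bar met
print(meets_critical_harm_threshold(0, 1_000_000_000))  # True: damage bar met
print(meets_critical_harm_threshold(99, 999_999_999))   # False: just under both
```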

The penalties

The act has teeth: penalties reach up to $1 million for a first violation and $3 million for repeat violations.

There is no private right of action; only the state Attorney General can enforce the law. Even so, this is a stark departure from the earlier approach, which relied on voluntary company commitments. The financial stakes are now real and tangible.
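Because the cap rises after the first violation, worst-case exposure grows faster than a flat per-violation cap would suggest. The sketch below assumes every violation is penalized at its cap and that violations are simply counted in sequence; `max_penalty_exposure` is a hypothetical helper, and real penalties depend on enforcement.

```python
# Upper-bound civil penalty exposure under the caps described in this article:
# up to $1M for a first violation, up to $3M for each subsequent one.
# Assumes every violation is penalized at its cap (a worst case, not a forecast).
FIRST_VIOLATION_CAP_USD = 1_000_000
REPEAT_VIOLATION_CAP_USD = 3_000_000


def max_penalty_exposure(violations: int) -> int:
    """Hypothetical helper returning the worst-case total for `violations`."""
    if violations < 0:
        raise ValueError("violations must be non-negative")
    if violations == 0:
        return 0
    return FIRST_VIOLATION_CAP_USD + REPEAT_VIOLATION_CAP_USD * (violations - 1)


for n in (1, 2, 5):
    print(n, f"${max_penalty_exposure(n):,}")
# 1 $1,000,000
# 2 $4,000,000
# 5 $13,000,000
```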

The timing of the act is the clearest sign of the rift between federal and state approaches to AI regulation.

The act comes against the backdrop of President Trump issuing an executive order directing federal agencies to challenge state AI laws deemed "onerous" or conflicting with a "minimally burdensome national standard."

The order's language is aggressive, characterizing state regulations as creating "a patchwork regulatory framework" that allows "the most restrictive states to dictate national AI policy." It directs the FTC to issue policy statements, the FCC to consider federal reporting standards that would preempt state rules, and the Commerce Department to identify "onerous" state laws for potential legal challenge.

Legal opinion is split

Legal experts are skeptical that Trump's executive order can deliver on its preemption ambitions. The fundamental problem is constitutional: executive orders don't override state law. Only federal legislation or successful court challenges can do that. And each of the available legal theories (dormant Commerce Clause violations, First Amendment compelled-speech claims, and implied preemption through agency action) faces substantial hurdles.

Additionally, courts have historically given states wide latitude to regulate commerce within their borders, even when that regulation affects interstate businesses.

A case in point is National Pork Producers Council v. Ross, the 2023 Supreme Court decision that upheld California's Proposition 12 pork regulations despite their effects on out-of-state farmers. It suggests the dormant Commerce Clause arguments may not be as strong as the administration hopes.

This sets up a constitutional collision between state-level consumer protection authority and federal preemption claims that legal experts predict will generate prolonged litigation.

California came first

New York's law was also not written on a blank slate. California's Transparency in Frontier Artificial Intelligence Act, signed by Governor Gavin Newsom in September 2025, took effect on January 1, 2026. The two laws share DNA: both use the same 10^26 FLOP threshold for frontier models, both require public safety frameworks, and both mandate incident reporting to state emergency services.

There are differences, however. California gives developers 15 days to report safety incidents, except for imminent threats, which require notice within 24 hours. New York's single 72-hour clock is significantly tighter than California's standard window. California caps penalties at $1 million per violation; New York escalates for repeat offenders. California uses existing state agencies for oversight; New York is building a dedicated office with rulemaking authority.
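To see how the clocks line up in practice, the Python sketch below computes each filing deadline from a single detection time. It assumes, for illustration, that every clock starts at the moment of detection; the dictionary labels and `reporting_deadlines` are hypothetical names, and when each statute's clock actually starts is a question for counsel.

```python
from datetime import datetime, timedelta, timezone

# Reporting windows as summarized in this article. Assumes each clock starts
# at detection, which may not match the statutory trigger.
REPORTING_WINDOWS = {
    "New York (RAISE Act)": timedelta(hours=72),
    "California (TFAIA)": timedelta(days=15),
    "California (TFAIA), imminent threat": timedelta(hours=24),
}


def reporting_deadlines(detected_at: datetime) -> dict[str, datetime]:
    """Hypothetical helper: latest filing time per regime, earliest first."""
    deadlines = {name: detected_at + window for name, window in REPORTING_WINDOWS.items()}
    return dict(sorted(deadlines.items(), key=lambda item: item[1]))


detected = datetime(2027, 3, 1, 9, 0, tzinfo=timezone.utc)
for name, due in reporting_deadlines(detected).items():
    print(f"{name:38} {due:%Y-%m-%d %H:%M} UTC")
```

For an incident detected at 09:00 UTC on March 1, 2027, the imminent-threat deadline lands on March 2, New York's on March 4, and California's standard deadline on March 16, which is why New York's clock tends to set the pace for non-imminent incidents.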

These variations create a compliance arithmetic that enterprise lawyers are already running. A company covered under both laws needs workflows that can satisfy New York's faster reporting timeline while maintaining California's documentation standards. The safest path, according to compliance advisors, is adopting "the highest common denominator as a baseline."
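"Highest common denominator" can be read field by field: take the shortest reporting window and the union of obligations across every state that applies. Here is a minimal Python sketch of that idea. The `Obligations` fields are a hypothetical, simplified summary, and because the article mentions only one 72-hour clock for New York, that clock is assumed here to apply to imminent threats as well.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class Obligations:
    """Hypothetical, simplified summary of one state's frontier-model duties."""
    standard_report_window: timedelta   # shorter window = stricter
    imminent_report_window: timedelta   # window when harm is imminent
    publish_safety_framework: bool
    report_incidents: bool


def strictest_baseline(*states: Obligations) -> Obligations:
    """Combine obligations by taking the most demanding value of each field."""
    if not states:
        raise ValueError("need at least one jurisdiction")
    return Obligations(
        standard_report_window=min(s.standard_report_window for s in states),
        imminent_report_window=min(s.imminent_report_window for s in states),
        publish_safety_framework=any(s.publish_safety_framework for s in states),
        report_incidents=any(s.report_incidents for s in states),
    )


# Values as summarized in this article (NY imminent window is an assumption).
NEW_YORK = Obligations(standard_report_window=timedelta(hours=72),
                       imminent_report_window=timedelta(hours=72),
                       publish_safety_framework=True, report_incidents=True)
CALIFORNIA = Obligations(standard_report_window=timedelta(days=15),
                         imminent_report_window=timedelta(hours=24),
                         publish_safety_framework=True, report_incidents=True)

baseline = strictest_baseline(NEW_YORK, CALIFORNIA)
print(baseline.standard_report_window)  # 3 days, 0:00:00
print(baseline.imminent_report_window)  # 1 day, 0:00:00
```

Under these assumptions the merged baseline (72 hours normally, 24 hours for imminent threats) is stricter than either state's rules taken alone, which is the point of building to the baseline rather than to any single law.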

Compliance reality

The regulatory tussle unfolding in the U.S. is just one aspect of the challenge. While laws can be passed, their implementation can be a different ball game altogether, as was seen in the EU.

With the federal and state governments yet to find common ground, the real challenge facing enterprises is how to treat state laws that remain enforceable while they wait for federal intervention.

Even if the federal government does intervene, how long will litigation between the states and the federal government take to resolve in the courts?

The answer emerging from legal advisors and compliance officers is surprisingly consistent: build for the strictest standard and apply it broadly. Gartner predicts enterprises will invest $5 billion in AI compliance efforts by 2027. Forrester expects 60% of Fortune 100 companies to appoint heads of AI governance in 2026.

The requirements also cascade outward. Even companies that aren't frontier model developers will feel the effects through vendor due diligence: when an AI vendor is subject to the RAISE Act, procurement and risk management teams will have to verify its compliance, and when a cloud provider runs infrastructure that trains covered models, contracts will need appropriate provisions.

For companies that might be covered (those training large models, fine-tuning frontier systems, or operating in adjacent spaces), the checklist is more demanding.

Compliance advisors recommend conducting coverage assessments to determine if current AI model training investments exceed New York's thresholds, mapping state-law exposure across all jurisdictions where the company operates, and refining incident response procedures to meet the 72-hour filing deadline.

However, while enterprises may be quick to make changes, the lack of clarity remains a challenge. Amidst this regulatory shift in the U.S., Colorado's AI Act takes effect June 30, 2026. It imposes the nation's first comprehensive duty of care for developers and deployers of high-risk AI systems, a much broader scope than frontier-model-only laws.

Illinois began enforcing amendments to its Human Rights Act covering AI in employment decisions on January 1, 2026, the same day Texas's Responsible AI Governance Act took effect.

The patchwork is real, but it's not random. Common threads run through these laws: transparency requirements, incident reporting obligations, documentation mandates, and accountability mechanisms.

Companies building robust AI governance programs will find they're largely prepared for multiple jurisdictions, even if specific deadlines and definitions vary.

The regulatory floor

The RAISE Act takes effect January 1, 2027, giving covered companies a year to prepare. California's TFAIA is already live. The federal litigation that Trump's executive order promises will take years to resolve, if it produces clear answers at all.

For technology leaders, the strategic calculation is increasingly clear. State laws represent the regulatory floor that's actually enforceable. Federal preemption remains aspirational at best, legally uncertain at worst.

The RAISE Act does not settle America’s AI governance debate, but it shifts where that debate is being decided. With federal preemption legally uncertain and litigation likely to stretch for years, state laws are becoming the most immediate and enforceable rules facing AI developers.

For companies building advanced AI systems, the message is increasingly clear. The future of AI regulation in the United States will be defined not by a single national framework but by the strictest standards that hold up in practice. Whether this state-driven approach ultimately leads to a coherent national policy or locks in long-term fragmentation will shape not only how AI is developed, but also who ultimately benefits from it.

Quick Bits, No Fluff

Lenovo’s Qira bets on cross-device AI: new assistant will live on PCs and phones, mixing local and cloud models to act directly on your hardware.

Intel’s Articul8 chases enterprise AI cash: Intel’s spin-off is halfway to a $70M round at a $500M valuation for tailored genAI stacks.

UK minister slams Grok over deepfakes: Liz Kendall demands action after the platform serves AI-generated “undressed” images of women and girls.

Hire globally. Pay smarter.

Why limit your hiring to local talent? Athyna gives you access to top-tier LATAM professionals who bring tech, data, and product expertise to your team—at a fraction of U.S. costs.

Our AI-powered hiring process ensures quality matches, fast onboarding, and big savings. We handle recruitment, compliance, and HR logistics so you can focus on growth. Hire smarter, scale faster today.

*This is sponsored content

Thursday Poll

🗳️ How are you treating RAISE Act-style laws in your AI planning?

3 Things Worth Trying

Run a “RAISE-style” fire drill

Pick a real AI system you use, then simulate a critical incident: who detects it, who documents it, and can you file a regulator-ready report within 72 hours?
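A drill is easier to score if every stage is timestamped. The Python sketch below checks a simulated timeline for missing stages, out-of-order stages, and a blown 72-hour window. The stage names and `score_drill` are hypothetical, and it assumes the clock starts at detection.

```python
from datetime import datetime, timedelta

REPORTING_WINDOW = timedelta(hours=72)  # New York's clock, as described above
# Hypothetical drill stages, in the order they should happen.
STAGES = ("detected", "triaged", "documented", "filed")


def score_drill(timeline: dict[str, datetime], window: timedelta = REPORTING_WINDOW) -> list[str]:
    """Return the problems found in a drill timeline; an empty list means it passed."""
    missing = [stage for stage in STAGES if stage not in timeline]
    if missing:
        return [f"missing stage: {stage}" for stage in missing]
    problems = []
    # Each stage should finish no earlier than the one before it.
    for earlier, later in zip(STAGES, STAGES[1:]):
        if timeline[later] < timeline[earlier]:
            problems.append(f"{later} recorded before {earlier}")
    elapsed = timeline["filed"] - timeline["detected"]
    if elapsed > window:
        problems.append(f"filed {elapsed - window} past the {window} window")
    return problems


t0 = datetime(2026, 6, 1, 8, 0)
drill = {
    "detected": t0,
    "triaged": t0 + timedelta(hours=6),
    "documented": t0 + timedelta(hours=50),
    "filed": t0 + timedelta(hours=80),
}
print(score_drill(drill))  # ['filed 8:00:00 past the 3 days, 0:00:00 window']
```

In this example, documentation alone ate 44 hours, which is usually where a real drill shows the bottleneck.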

Adopt “strictest state wins” as policy

Sit down with Legal/Compliance and codify one simple rule: wherever state laws conflict, you default to the most demanding transparency, safety, and reporting standard.

Inventory your AI exposure like a supply chain

Map where models are trained, fine-tuned, and deployed (vendors included), then tag which pieces might cross California/New York/Colorado thresholds so you’re not surprised by coverage in 2026–2027.
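An inventory only helps if each row says why it might matter. Here is a minimal Python sketch, assuming a hypothetical `ModelAsset` record with a rough compute estimate per asset. The revenue test applies to the company as a whole, so it is left out here, and a tag means "look closer", not "covered".

```python
from dataclasses import dataclass, field

COMPUTE_THRESHOLD_OPS = 10**26  # shared CA/NY frontier threshold, per this article


@dataclass
class ModelAsset:
    """One row of a hypothetical AI inventory (all field names are illustrative)."""
    name: str
    stage: str              # "trained", "fine-tuned", or "deployed"
    supplier: str           # "in-house" or the vendor's name
    training_ops: int       # best estimate of compute for the underlying training run
    high_risk_use: bool     # used in the kinds of decisions Colorado treats as high-risk
    tags: set[str] = field(default_factory=set)


def tag_exposure(assets: list[ModelAsset]) -> list[ModelAsset]:
    """Add review tags in place and return the same list."""
    for asset in assets:
        if asset.training_ops > COMPUTE_THRESHOLD_OPS:
            asset.tags.add("CA/NY frontier review")
        if asset.high_risk_use:
            asset.tags.add("Colorado high-risk review")
        if asset.supplier != "in-house":
            asset.tags.add("vendor due diligence")
    return assets


inventory = tag_exposure([
    ModelAsset("base-llm", "trained", "in-house", 2 * 10**26, False),
    ModelAsset("resume-screener", "fine-tuned", "in-house", 10**23, True),
    ModelAsset("support-bot", "deployed", "ExampleVendor", 10**24, False),
])
for asset in inventory:
    print(asset.name, sorted(asset.tags))
```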

Meme Of The Day

Rate This Edition

What did you think of today's email?