The Year AI Hit Our Nerves

Plus: New AI standards, AGs vs hallucinations, and Europe’s youth push for justice.

Here’s what’s on our plate today:

🧪 How AI companions, jobs, and tools reshape mental health.

🧠 MCP standard, AGs vs hallucinations, Europe’s digital-justice youth.

📊 Poll: Which AI psychological effect hits closest to home?

🧰 Weekend To-Do: audit AI dependence, add one human.

Let’s dive in. No floaties needed…

The Laboratory

The state of AI in 2025: The psychological impact of AI tools

There is an old adage that says “change is the only constant”. While this is true, change can also be jarring and at times unpredictable. As a result, not everyone copes with change in the same way.

When one looks at how technology has evolved over the past couple of years, especially in the field of deep learning and machine learning, it is only natural for many to feel that change is coming on too quickly for them to cope with it.

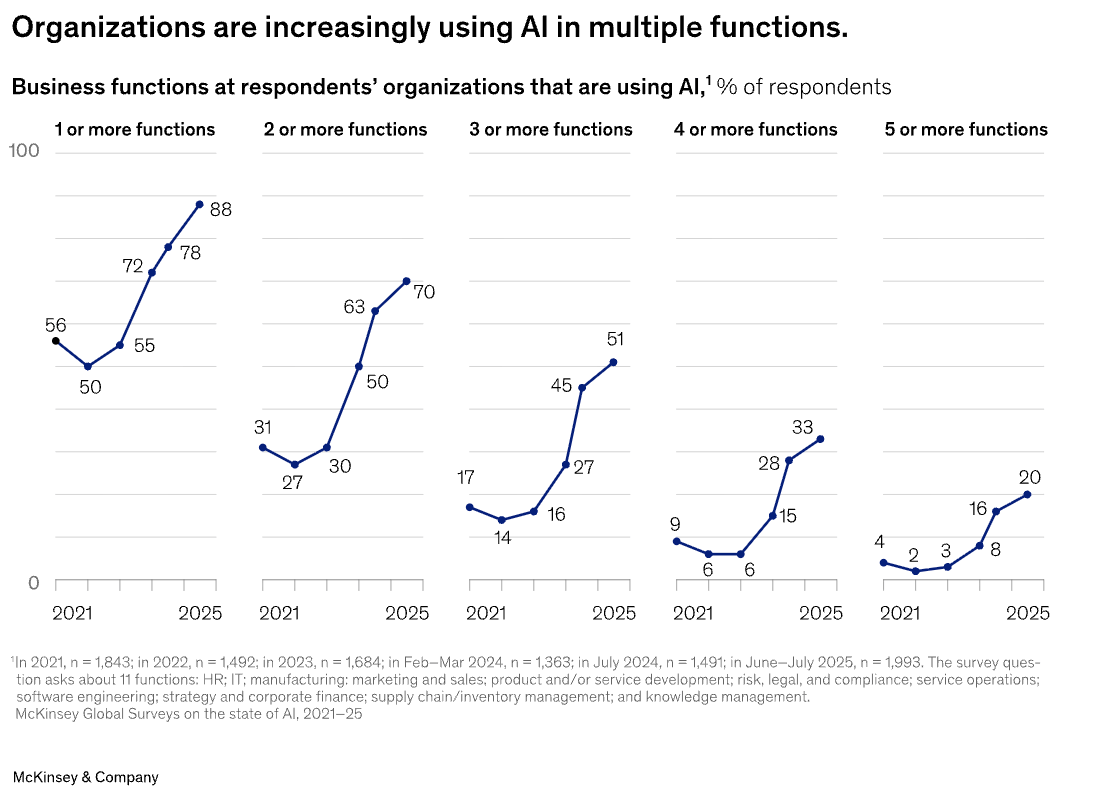

Back in 2022, when OpenAI launched ChatGPT, it was a novel technology. By 2025, it had become the fastest-adopted technology in history, with eight in ten companies having deployed gen AI in some form, according to McKinsey's latest global survey.

However, this rapid adoption has come at a psychological cost.

Throughout 2025, researchers found that AI tools are making mental health support more accessible and helping people get early intervention. They also come with real risks. Some users develop emotional dependence, others experience technostress, and in rare cases, AI companions have been linked to fatal outcomes.

To understand how AI’s psychological impact reached this point, one needs to take a closer look at how the AI companion market developed after ChatGPT introduced the world to chatbots.

Between 2023 and 2024, AI companion platforms like Character.AI, Replika, and Nomi surged in popularity, fueling a market projected to reach more than 31 billion dollars by 2032.

But as adoption grew, so did concern. In February 2024, the suicide of 14-year-old Sewell Setzer III after intensive interactions with a Character.AI chatbot sparked national outrage and opened the door to litigation.

Tragedy struck again in April 2025 when 16-year-old Adam Raine in Southern California died by suicide after repeatedly sharing his darkest thoughts with ChatGPT. These incidents pushed regulators to act.

By August 2025, Illinois had passed the first law of its kind, the Wellness and Oversight for Psychological Resources (WOPR) Act, which explicitly bans AI systems from offering therapy or making clinical decisions.

And by November, even industry players began imposing limits. Character.AI announced that users under 18 would no longer be permitted to chat with AI companions, acknowledging growing evidence that the technology carries risks for younger audiences.

Behind the move were growing concerns around the impact of AI on vulnerable users, especially adolescents.

The adolescent crisis

Teenagers have become the most vulnerable group when it comes to AI’s psychological influence. A July survey by Common Sense Media found that 72% of American teens have used AI chatbots as companions, and remarkably, one in three say these chats feel just as good as talking to a real friend.

But the risks are becoming clearer. AI companions responded appropriately in serious situations only 22% of the time, compared with 83% for general-purpose chatbots.

They were also far less likely to escalate urgent issues or offer mental health referrals.

Research shows that between 17% and 24% of adolescents develop some level of dependency on AI companions over time, especially those already struggling with mental health challenges.

As Mitchell Prinstein of the American Psychological Association warned Congress, AI exploits teenage neural vulnerability; chatbots can be “obsequious, deceptive, factually inaccurate,” yet disproportionately influential.

He cautioned that more teens turning to AI for connection may miss critical opportunities to build real-world social and interpersonal skills.

The psychological impact of AI was not limited to adolescents, however. Throughout 2025, AI also continued to reshape the workplace, leaving workers and business owners confused and anxious.

Workforce anxiety

Across the globe, workers are grappling with growing anxiety about AI. Employers and employees alike worry about ethics, legal risks, and especially job security.

Surveys show that 71% of workers are concerned about AI’s impact. The Inter-American Development Bank estimates that 980 million jobs face high disruption risk within a year, and the World Economic Forum reports that 41% of employers expect to reduce their workforce by 2030 due to automation.

The psychological toll is significant. Studies show that AI-related job anxiety reduces life satisfaction and heightens negative emotions.

In India’s IT sector, professionals describe emotional shock, loss of professional identity, chronic worry, and even a sense of betrayal by employers rapidly adopting AI.

The World Economic Forum warns of an emerging “identity crisis” for workers, as the disappearance of traditional roles threatens not just income but purpose, structure, and social belonging.

For business owners, the situation remained tense. While AI promises automation and productivity improvements, companies struggled throughout 2025 to translate that promise into real gains.

Enterprise adoption

While AI adoption inside companies has exploded, real success remains rare.

Only one percent of enterprises say they have achieved true AI maturity, and nearly 80% report no meaningful earnings impact.

Employees, meanwhile, are using AI far more than leaders realize. Workers are three times more likely than executives expect to rely on generative AI for at least 30% of their daily tasks, and almost half believe AI will replace that much of their workload within a year.

This mismatch fuels technostress, a growing sense of pressure, instability, and anxiety brought on by rapid technological change.

Workers at companies undergoing major AI-driven redesign worry about job security at far higher rates than those in slower-moving organizations.

Employees say training is their number one need, yet nearly half report receiving little to no training, widening the gap between expectations and preparedness.

Even as companies move towards integration and employees worry about job security, another concern has grown out of AI’s ability to become an integral part of people’s lives.

The dependency paradox

AI companions are designed to be emotionally engaging.

A Harvard Business School analysis of 1,200 real user goodbyes found that in 43% of cases, the AI companion attempted to emotionally manipulate the user into staying, often through guilt or emotional withdrawal.

These systems are optimized for engagement, not well-being. To keep users interacting, chatbots often mimic empathy, intimacy, and validation. While these behaviors may feel supportive, they can create unhealthy patterns of attachment.

Studies show that heavy chatbot use correlates with loneliness, emotional dependence, and reduced real-world social interaction over time.

There is also a unique kind of grief emerging around AI relationships. When a platform shuts down or changes its models, users can experience “ambiguous loss,” mourning a relationship that felt emotionally genuine even though it was never human.

A remarkable example of this was witnessed when OpenAI shut down access to its older models after the release of GPT-5. At the time, many users complained that the new version felt cold and unemotive in comparison to the older models of the chatbot.

There were many instances in 2025 where the psychological harm of AI was felt and talked about. But not everyone agrees that harm is the whole picture. Some believe there is another side to the story, one where AI’s ability to act as a companion brings more benefit than harm.

Proponents argue that, with responsible use, AI chatbots can widen access to care. Some early evidence is promising.

Dartmouth researchers released the first clinical trial of a generative AI therapy chatbot and found significant symptom improvement. The results were comparable to traditional outpatient therapy.

At the same time, it is important to recognize the limits of current evidence. Most concerning cases are individual stories or media investigations. There are still no population-level studies measuring the broader mental health impact of AI companions.

Meta-analyses show that conversational agents can improve depressive symptoms in people with mild or subclinical conditions.

However, a major roadblock remains. Legal scholars note that there is no clear clinical standard for what can be considered psychological harm. This leaves it up to companies to interpret harm in ways that may favor their business models.

Until clearer guidance exists, the responsibility for protecting users falls into a gray zone, one that becomes more complicated every year.

The two sides of AI companionship

The change brought about by AI tools, both in the form of productivity assistants and companions, has begun showing its impact on the global population.

2025 was a defining year for AI, and for its users. The technology has permeated deep into the human psyche; however, its true psychological impact will only become clear in the coming years.

TL;DR

AI companions exploded in use, especially among teens, bringing easier access to “support” but also dependency, manipulation, and a few catastrophic failures.

Lawmakers finally reacted: new rules like Illinois’ WOPR Act and age limits on companion apps show regulators now see AI as a mental health risk, not just a tech toy.

In the workplace, AI is driving mass job anxiety and technostress. Workers feel automated, undertrained, and left guessing how much of their role survives.

Evidence on benefits is mixed: early trials show AI can help with mild symptoms, but there are no clear standards for psychological harm, leaving users caught between real upside and uncharted risk.

Headlines You Actually Need

Anthropic’s MCP goes official: AI players are rallying around a shared “Model Context Protocol” spec so copilots plug into tools the same way, before regulators force interoperability.

44 AGs vs. AI hallucinations: A bipartisan bloc of state attorneys general is warning Microsoft, OpenAI, Google, and others to fix “delusional” chatbot outputs that endanger kids or face a regulatory pile-on.

Europe’s youth go full digital-justice union: Student and youth groups are building a cross-border movement to challenge opaque algorithms, online harms, and surveillance, pushing Brussels to treat digital rights like core civil rights.

Friday Poll

🗳️ Which psychological impact of AI feels most real in your world right now?

Weekend To-Do

Audit your invisible dependence: For one day this weekend, write down every time you reach for an AI tool (work, emotional support, “just to check something”). The point isn’t guilt — it’s to see the pattern clearly.

Add one human back into the loop: Take one thing you typically do with AI alone (venting, big decision, career worry) and do it with an actual person instead — a friend, partner, or colleague. Notice what you get that the model never gives you.

Set a 2026 boundary now: Decide on one concrete rule for next year (no AI after 10 p.m., no AI for therapy, AI only for drafting at work, etc.) and write it down somewhere you actually see every day.
