Roko's Basilisk

Inside 2025’s AI Rulebook Wars

Plus: Meta chases margins, publishers test bot tolls, and Apple quietly makes leaving less painful.

Here’s what’s on our plate today:

🧪 How 2025 exposed clashing global AI rulebooks.

🧠 Bite-Sized Brains: Meta pivots, RSL launches, easier phone switching.

📊 Poll: Which regulatory path looks most dangerous long-term?

✏️ Prompt: Write your first principle for a global AI law.

Let’s dive in. No floaties needed…

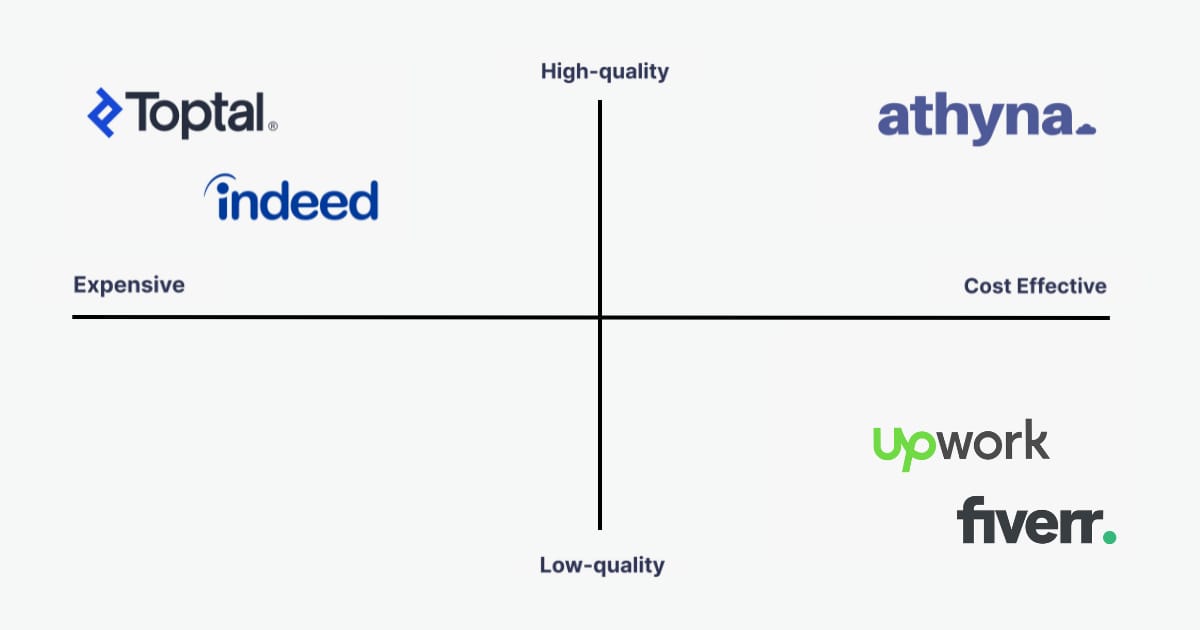

Save $80,000 per year on every engineering hire.

Why limit your hiring to local talent? Athyna gives you access to top-tier LATAM professionals who bring tech, data, and product expertise to your team—at a fraction of U.S. costs.

Our AI-powered hiring process ensures quality matches, fast onboarding, and big savings. We handle recruitment, compliance, and HR logistics so you can focus on growth. Hire smarter, scale faster today.

*This is sponsored content

The Laboratory

The state of AI in 2025: How the world tried to regulate AI

When social media first started gaining mainstream attention in the mid-2000s, few understood the harm it could do to its users. The result was largely unchecked growth and development, which eventually led to controversies such as the Cambridge Analytica scandal.

Beyond controversies, social scientists also began warning of the unintended consequences of excessive social media use, including problems like body dysmorphia, the spread of misinformation and disinformation, and addiction.

All of this forced regulators to step in, and even in 2025 they are still trying to tame the beast. Australia's ban on social media for teenagers is a clear example. With social media, regulatory intervention was slow and often came only after the damage had already been done.

However, throughout 2025, global powers struggled to understand how to deal with a far more powerful technology: one that has already started showing its impact on society, even before it has reached its full potential.

The struggle was not over whether AI needs to be regulated. Rather, it stemmed from concerns that strict, early rules could hamstring the technology's development.

In 2025, the world of AI witnessed the European Union struggling to implement rules, the United States racing to tear down guardrails to promote advancements, and China tightening state control. Amidst this chaos, the Global South struggled to make its voice heard.

Europe's ambitious gambit stumbles

The EU entered 2025 riding high. Its AI Act had become the world's first comprehensive AI legislation when it entered into force in August 2024. By February 2025, the first provisions had kicked in, banning manipulative AI practices and establishing AI literacy requirements. The message was clear: Europe wanted to lead the world in responsible AI governance.

However, the rules proved too much, too early, for both the industry and the regulators themselves.

Most EU member states missed the August 2025 deadline to designate enforcement authorities, leaving the framework without the infrastructure needed to implement it.

Critical guidance documents were also late. The General-Purpose AI Code of Practice was released only weeks before the compliance deadlines took effect.

The delays, coupled with strict guardrails, drew industry pushback: 56 European AI companies urged the Commission to pause parts of the Act, warning that compliance costs could stifle innovation.

By November, the Commission was considering delaying full implementation to 2027.

The Commission walked a careful line. It rejected calls for a full enforcement pause, declaring "there is no pause." Yet it proposed targeted delays through its "Digital Omnibus" package, pushing some requirements back while maintaining the Act's overall architecture.

The result? A regulatory framework that's simultaneously too strict for industry and too weak for proper enforcement.

Fines under the Act can reach €35 million or 7% of global annual turnover, whichever is higher. However, these substantial figures mean nothing without the regulatory capacity to impose them.

While the EU was figuring out how to tackle the real-world impact of its AI regulations, the U.S. had taken a different approach.

America pushes for deregulation

The United States made a clear choice: get out of the way.

President Trump's approach was unambiguous from day one, when he revoked Biden's 2023 executive order on AI safety. On January 23, 2025, he followed up with an executive order titled "Removing Barriers to American Leadership in Artificial Intelligence." The underlying view was that staying ahead in the AI race is a matter of national competitiveness, and that regulation is the enemy of innovation.

By July, the administration released America's AI Action Plan, a 25-page document focused on "global AI dominance" through deregulation, infrastructure investment, and international competition. The plan explicitly directed agencies to "remove red tape and onerous regulation" while enhancing export controls on AI compute to rival nations.

The Trump administration didn't stop at federal deregulation. It went to war with states trying to fill the regulatory vacuum.

As states rushed to protect their residents, 38 states enacted approximately 100 AI-related measures in 2025, and the administration pushed back hard.

Trump's congressional allies first tried to slip a 10-year moratorium on state AI enforcement into the administration's budget bill, but bipartisan opposition blocked it: the Senate voted 99-1 to strip the moratorium, and nearly 40 state attorneys general signed an open letter against federal preemption. A later attempt to attach similar preemption language to the National Defense Authorization Act also failed.

The administration, however, was not deterred.

In December, Trump announced that he would sign an executive order establishing "one rule" for AI that would override state regulations.

The executive order would reportedly create an "AI Litigation Task Force" to challenge state AI laws in court, effectively using federal power to prevent states from protecting their residents.

Even Republican governors pushed back. Florida's Ron DeSantis called it "federal government overreach" that would "let technology companies run wild."

And although Reuters reported that the White House had put the order on hold, the episode shows the lengths to which the current administration is willing to go to help AI companies and ensure global competitiveness.

The result is regulatory chaos. What's legal in Texas might be banned in California. Companies face jurisdictional uncertainty, caught between states trying to protect consumers and a federal government trying to stop them.

China's approach to AI regulations: innovation under state control

While Western leaders struggled to balance regulation with innovation, China took a third path. It embraced AI development while ensuring that it serves state priorities.

The year started with DeepSeek-R1, a Chinese AI model that rivaled American capabilities at a fraction of the cost. When it launched in January, it overtook ChatGPT at the top of Apple's App Store charts, shifting China's self-perception from catch-up mode to renewed confidence.

But success came with strings attached. DeepSeek is subject to Chinese censorship requirements prohibiting content that could "damage the national image." On sensitive topics like Tiananmen Square, DeepSeek reportedly responded with: "Not sure how to approach this type of question yet."

China's regulatory framework prioritizes government oversight and ideological alignment. The country opted for a framework that requires developers to let regulators test systems before deployment.

Oversight intensified after DeepSeek's success: authorities are reportedly screening the company's investors, and some staff have reportedly been asked to surrender their passports. All the while, China has been positioning itself as a leader in global AI governance.

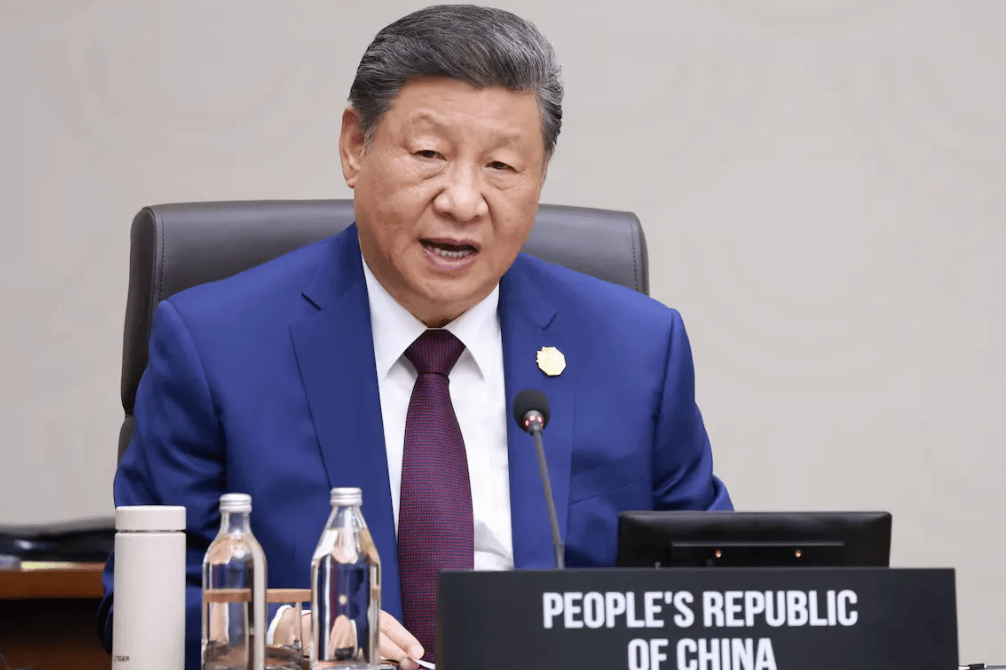

At the Asia-Pacific Economic Cooperation (APEC) summit in South Korea, President Xi proposed creating the World Artificial Intelligence Cooperation Organization, offering an alternative to Western-led frameworks.

Xi's proposed organization would coordinate AI development, governance, and technical standards to give countries shared access to AI resources. Chinese officials floated Shanghai as a potential headquarters, placing the group outside traditional Western power centers.

Xi framed the effort as a way to close the digital and AI divide in the Asia Pacific, saying the technology should benefit all nations and support human well-being.

Notably, U.S. President Donald Trump did not attend, giving Xi greater space to shape the narrative and position China as a champion of international cooperation.

Another major proposal called on APEC members to support the open flow of green technologies. This was significant because powering advanced AI systems will require massive amounts of clean energy infrastructure, from batteries to solar panels.

While the proposals sound ambitious and appealing, turning them into real-world action may prove far more difficult, especially since the Global South appears even more divided over regulating AI than the major powers are.

The Global South fights for a voice

While powerful nations sparred over how to regulate artificial intelligence, developing countries faced a harsher truth: they had almost no voice in shaping the rules, yet they would shoulder many of the consequences. The imbalance was impossible to ignore.

The economic and human costs painted a stark picture. In Kenya, content moderators training AI systems earned as little as $1.50 an hour to sift through some of the internet’s most disturbing material.

At the same time, the global expansion of AI data centers drove electricity use comparable to that of the entire nation of France. Much of the environmental strain, from land use and water consumption to carbon output, fell on developing regions that lacked the resources to manage or resist it.

The economic payoff was equally uneven. While AI is projected to add $15.7 trillion to the global economy by 2030, most developing countries, with the exception of China, are expected to see only marginal benefits. The message was clear: the nations least responsible for shaping AI’s future risked being left out of its rewards.

Growing frustration sparked organization. On July 6, the BRICS group, representing eleven countries, issued its Leaders’ Declaration on Global Governance of Artificial Intelligence, asserting that the United Nations, not Western-led blocs, should be the primary authority for global AI governance.

Their framework emphasized digital sovereignty, equitable access to AI systems, and development goals that rarely receive attention in European or American policy debates.

Individual nations also began crafting their own approaches. Indonesia released guidelines centered on transparency and accountability. Brazil applied AI rules to its financial sector.

India pushed forward digital inclusion strategies aimed at ensuring underserved communities were not left behind in an AI-powered economy.

Yet these efforts face immense obstacles. Many developing nations lack the technical infrastructure needed for large-scale AI adoption.

Regulatory bodies are understaffed and underfunded. And global discussions about AI safety, governance, and economic opportunity still tend to exclude the very countries that stand to be most affected.

The result is an emerging divide not only in AI capability, but in who gets to decide how the technology shapes the future.

The year AI regulation grew up

2025 was the year AI regulation moved from aspiration to implementation, and we discovered that implementation is much harder than aspiration.

The EU learned that writing comprehensive rules is easier than enforcing them. The U.S. chose deregulation over protection, sparking a federal-state showdown that will shape American governance for years.

China demonstrated that state control and innovation can coexist, at least on Beijing's terms. The Global South fought for representation in a system designed without them.

None of these approaches solved the fundamental challenge: how do you regulate a technology that's transforming faster than any regulatory process can accommodate?

Today's chaotic AI regulatory landscape harks back to the era when social media was reshaping human interaction. Much of the world is still searching for ways to handle the problems unleashed by an always-connected society, for better or worse.

Looking back at 2025, one answer is clear: there is no single, unified approach to regulating AI.

As the world moves toward greater automation, the question for users and enterprises is how to manage the new normal: a more complex era of regulatory oversight. Add the confusion around intellectual property and creative rights, and 2025 starts to look like the prequel to a struggle that is only just beginning.

Bite-Sized Brains

Meta’s open-source era wobbles: Meta is weighing charging for its next “Avocado” AI model, a shift away from the fully open Llama playbook toward more closed, monetized systems.

The web gets a paywall for bots: A new Really Simple Licensing (RSL) spec lets publishers tell AI crawlers “pay, limit use, or stay out,” while keeping normal search traffic intact.

iOS 26 makes defecting way easier: Apple and Google are rolling out native tools so users can hop between iPhone and Android with faster, more complete data transfers—under EU pressure via the DMA.

Explore the essential startup reading database.

We've diligently curated a list of top-notch articles, blogs, and readings that are pivotal for startup founders. These resources come from reputable platforms and are geared towards fueling your entrepreneurial journey.

And here's the kicker: we've organized it all in a user-friendly Notion database for easy access and tracking. Double win!

Learning from the best is how you become the best. As aspiring leaders and innovators, this curated list serves as a bridge to absorbing the knowledge that has propelled others to success.

*This is sponsored content

Prompt Of The Day

In 3-4 sentences, draft the opening clause of your ideal AI law. Assume you’re writing for the whole planet: what’s the single non-negotiable principle (rights, safety, transparency, sovereignty, something else) you’d put first, and how would you phrase it so neither governments nor companies can easily wiggle around it?

Tuesday Poll

🗳️ Looking at the main 2025 approaches, which path feels most dangerous long term?