When AI Rules Fail

Plus: Headlines on Perplexity, Grok, Sublime, and weekend AI governance to-do.

Here’s what’s on our plate today:

🧪 AI policies are catching up to how people actually work.

📰 Headlines: NYT vs Perplexity, Grok creepiness, AI curation.

📊 Friday Poll: How real are workplace AI rules?

🧰 Weekend to-do: ship one small, real AI governance upgrade.

Let’s dive in. No floaties needed…

The Laboratory

AI policies matter more than ever

Modern institutions are built on shared values and trust among like-minded individuals. Even with common ground, though, individuals will not agree on everything all the time. Because people want different things while remaining part of a larger group, institutions need an agreed-upon framework of laws and regulations.

These not only protect the rights of individuals but also help preserve the legitimacy and credibility of the institutions.

For decades, organizations have relied on such rules to protect themselves and their employees, and to ensure that the pursuit of one party's goals does not trample the rights of the many.

The rise of AI in the workplace

However, as AI tools become ubiquitous in the workplace, companies face a critical governance challenge: employees are using AI systems like ChatGPT, Claude, and Copilot with minimal oversight, potentially exposing organizations to data leaks, intellectual property risks, compliance violations, and reputational damage.

In response, many companies are now rethinking their AI policies.

These policies are rulebooks that spell out how employees can and cannot use AI tools. With government regulations still unclear and constantly changing, these policies have become a crucial way for businesses to encourage innovation while still managing risks.

These policies are the need of the hour, but in the early days of the AI rollout, not everyone saw the point of writing specialized guidelines for what they assumed would be just another workplace tool.

How company policies have responded to AI

In 2023, companies like JPMorgan Chase and Samsung restricted or banned tools like ChatGPT altogether, in Samsung's case after employees leaked internal data through the chatbot.

By 2024, the approach had changed. Instead of blocking AI, many organizations moved to controlled use. Firms such as Goldman Sachs began rolling out their own internal AI tools and set clear rules for how employees could use them.

Now in 2025, the focus has expanded to full-scale AI governance. Companies are under growing pressure from new regulations, including the EU AI Act and emerging state-level laws in the United States, pushing them to put stronger oversight and accountability measures in place.

Why companies need internal AI policies

For companies, the biggest need for internal AI policies stems from concern around data privacy. When employees paste sensitive information into public AI tools, that data can end up on outside servers or be used to train future models.

A Cyberhaven study from 2023 found that around 11% of the data employees pasted into ChatGPT was confidential, including source code, financial details, and customer data.

Intellectual property is another challenge. AI-generated content raises questions about who owns the work and whether it could violate someone else’s copyright or patents. Organizations must decide if content produced with AI counts as company property and how to handle the risk of accidental infringement.

Compliance rules also complicate things. Fields like healthcare, finance, and law operate under strict data regulations such as HIPAA, GDPR, and SEC rules.

Deloitte’s 2024 report on AI governance notes that companies must make sure AI use fits within these existing requirements while preparing for new regulations that are on the way.

So, while companies need guidelines, the task is more complicated than it appears.

Why enforcement is so difficult

Enforcing AI policies is proving much harder than writing them. Monitoring how employees use these tools is technically challenging because most AI systems run through regular web browsers. Tracking that activity without crossing into invasive surveillance is difficult, and companies are still trying to find the right balance between oversight and employee privacy.

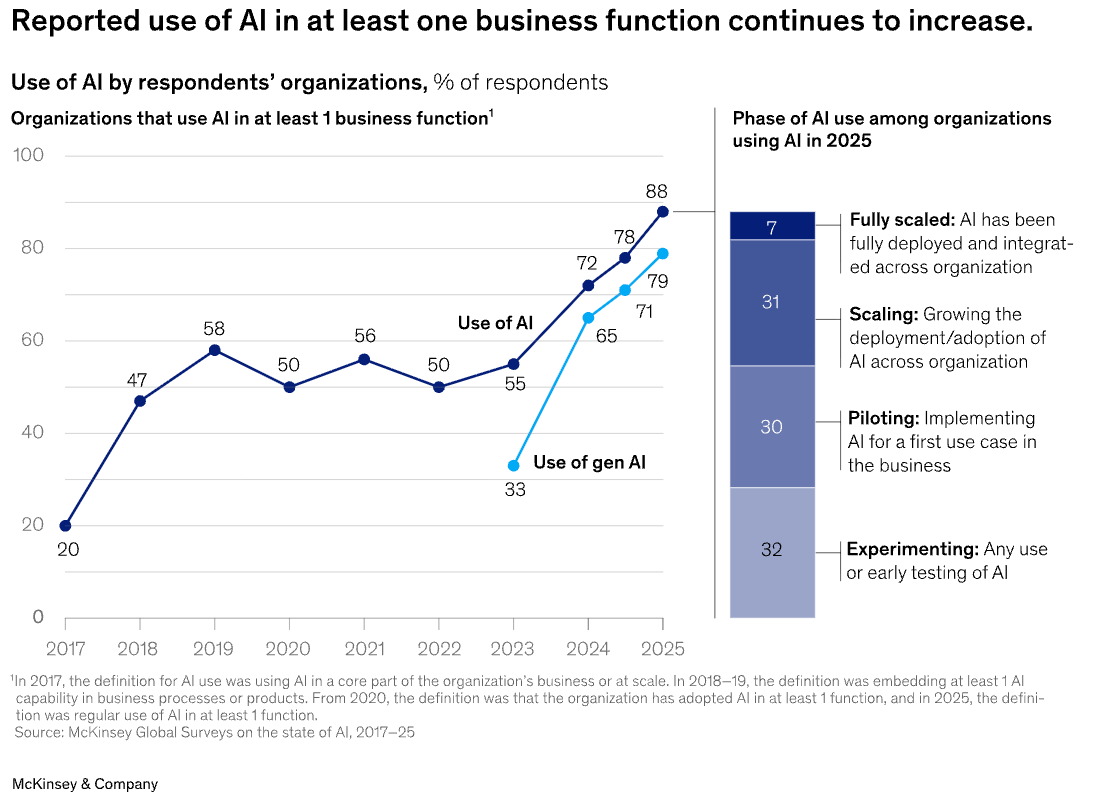

Training is another weak spot. McKinsey reports that while about 65% of companies now use generative AI in some form, 70% say they struggle with data issues, including unclear data-governance processes, difficulty integrating data quickly into AI models, and a shortage of high-quality training data.

All of these problems underline how critical data is for getting real value from AI.

This reflects a big gap between rolling out AI tools and training employees to use them, and the gap is hard to close because the tools themselves keep changing.

AI capabilities are evolving so quickly that policies often fall out of date within months. Rules written for text-generating tools may not cover image generation, coding features, or the new wave of multimodal systems. As a result, companies are stuck in a constant cycle of trying to keep policies current with technology that keeps moving faster.

So even when companies try to keep up, it is hard to tell whether the rules they write are reliable or workable in practice.

Innovation vs. oversight

Some critics say strict AI rules can slow innovation and hurt a company’s competitive edge. A Harvard Business Review analysis notes that when governance is too heavy-handed, skilled employees may leave for companies with more flexible policies.

Others believe the risks of AI are exaggerated and that standard security practices, like access controls and data-loss tools, are enough without adding AI-specific restrictions.

To make matters even more confusing, different types of organizations approach this tension in different ways. Startups and tech-focused companies usually prefer more open policies with light guardrails, relying on employee judgment and prioritizing speed.

Meanwhile, large, traditional enterprises tend to take a more cautious approach with detailed approval steps, focusing on reducing risk rather than moving fast.

Privacy advocates are also worried about how companies enforce these rules. Monitoring tools meant to catch improper AI use might collect sensitive employee data or discourage legitimate experimentation. The Electronic Frontier Foundation has warned that AI governance should not become an excuse for increased workplace surveillance.

What future AI policies may look like

Corporate AI policies are entering a new phase. As global regulations tighten, many expect the EU AI Act’s risk-based framework to become the default standard. In the United States, fragmented rules may eventually merge into a single federal approach, especially if a major AI-related incident occurs.

Rising AI autonomy will push policies further. As AI agents start sending emails, booking meetings, and making purchases, companies must define who is responsible when an automated system makes a mistake.

Companies may also need to create new roles, such as AI compliance officers, to maintain policies and train employees. Technical guardrails will help enforce rules automatically, although they risk being either too strict or too lenient.
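To make the strict-versus-lenient trade-off concrete, here is a minimal Python sketch of a prompt guardrail. Everything in it is an assumption for illustration: the pattern names, the regular expressions, the `check_prompt` function, and its `max_findings` knob are hypothetical and not drawn from any real product.

```python
import re
from dataclasses import dataclass

# Hypothetical patterns for illustration only; a real deployment would
# tune these to its own data and would need far more than three rules.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "api_key": re.compile(r"\b(?:sk|key)[-_][A-Za-z0-9]{16,}\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


@dataclass
class Verdict:
    allowed: bool
    findings: list[str]


def check_prompt(text: str, max_findings: int = 0) -> Verdict:
    """Allow a prompt only if it trips at most `max_findings` pattern types."""
    findings = [name for name, pattern in PATTERNS.items() if pattern.search(text)]
    return Verdict(allowed=len(findings) <= max_findings, findings=findings)


# A clean prompt passes under the strictest setting.
clean = check_prompt("Summarize this memo in three bullet points")

# Too strict: a 16-digit order number looks like a card number and is blocked.
false_alarm = check_prompt("Order 1234 5678 9012 3456 has shipped")

# Too lenient: raising the threshold lets an email and an API key through.
leak = check_prompt(
    "Contact jane@example.com, key sk-ABCDEFGHIJKLMNOP1234", max_findings=2
)
```

The same rule set blocks a harmless order number and, with a looser threshold, waves through a real secret. That is why guardrails like this usually need human review and regular tuning alongside them.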

Regardless of how companies approach internal AI policies, the goal remains the same: to protect the needs, security, and rights of both employees and organizations without losing out on either the AI race or the human touch.

TL;DR

Employees are using powerful AI tools with almost no guardrails, risking leaks, IP loss, and compliance violations.

Companies raced from banning public chatbots to rolling out internal tools, but enforcement, training, and data governance are badly lagging.

Policies age in months: text-only rules don’t cover multimodal tools, agents, or autonomous workflows, creating a constant governance catch-up game.

The next phase is risk-based, auditable AI use with clear accountability, where policies protect both innovation and people—not just the balance sheet.

Headlines You Actually Need

NYT goes after Perplexity—hard: The New York Times is suing Perplexity for allegedly scraping and reproducing millions of its articles, including paywalled content, without permission.

Grok is now a stalker’s sidekick: Futurism found Musk’s Grok chatbot handing out home addresses and detailed stalking-style instructions for everyday people with minimal prompting.

AI “taste” is the new power user skill: The Vergecast dives into Sublime, a platform betting that human curation plus AI, not pure automation, will define what’s worth your attention.

Weekend To-Do

Write a one-page “AI use at work” cheat sheet: Translate your company’s messy AI stance into simple do/don’t rules: what data never goes into public models, which tools are approved, and who to ask when in doubt.

Run a mini AI data-risk audit: Pick one team or workflow, trace where data touches AI tools (prompts, uploads, logs), and mark the riskiest three points. Decide on one concrete mitigation for each (redaction, access limits, internal-only models).

Propose a lightweight AI review ritual: Draft a recurring 30-minute monthly “AI usage review” meeting: what’s working, what went wrong, and one policy or practice you’ll update next month so the rules keep pace with the tech.
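If a spreadsheet feels like too much ceremony for the mini audit, a few lines of Python can do the ranking. This is only a sketch under stated assumptions: the `Touchpoint` fields, the 1-to-5 scoring scales, the "sensitivity times exposure" formula, and the sample support-team entries are all hypothetical placeholders to swap for your own.

```python
from dataclasses import dataclass


@dataclass
class Touchpoint:
    name: str
    sensitivity: int  # hypothetical scale: 1 (public data) to 5 (regulated or secret)
    exposure: int     # hypothetical scale: 1 (internal-only model) to 5 (public tool)
    mitigation: str = "undecided"

    @property
    def risk(self) -> int:
        # Simple assumed score: more sensitive data in a more exposed tool ranks higher.
        return self.sensitivity * self.exposure


def riskiest(points: list[Touchpoint], top_n: int = 3) -> list[Touchpoint]:
    """Return the `top_n` touchpoints with the highest risk score."""
    return sorted(points, key=lambda p: p.risk, reverse=True)[:top_n]


# Made-up example entries for one support team's workflow.
audit = [
    Touchpoint("Ticket text pasted into a public chatbot", 4, 5, "redaction"),
    Touchpoint("Customer CSV uploaded for analysis", 5, 3, "internal-only model"),
    Touchpoint("Prompt logs kept by a browser extension", 3, 4, "access limits"),
    Touchpoint("Marketing copy drafts", 1, 5),
]

for point in riskiest(audit):
    print(f"{point.risk:>2}  {point.name} -> {point.mitigation}")
```

The scores are a conversation starter, not a measurement. The useful part is forcing each of the top three touchpoints to have a named mitigation.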

Friday Poll

🗳️ Inside your company, which statement best describes your AI policy situation?
