Your Copilot Is Just Search

Plus: Experiments to audit “copilots,” AI paper slop, FSD texting, and Wrapped clones.

Here’s what’s on our plate today:

🧠 Most “AI copilots” are just search in disguise.

📰 Quick Bits: AI paper slop, FSD texting, and 2025 Wrapped clones.

🧰 Three experiments to separate real agents from branded search.

📊 Poll: How many of your copilots actually automate work?

Let’s dive in. No floaties needed…

The Laboratory

Why most “AI copilots” are just search in disguise

When OpenAI introduced the world to the power of Large Language Models in 2022, not many understood how this nascent technology would upend the business world. Within a couple of years, LLM-powered tools have gone from a research subject for tech enthusiasts to a mainstay of news headlines.

Along the way, many companies have jumped on the AI bandwagon, deploying tools that promise to revolutionize productivity through intelligent assistance. These tools, often branded as “AI copilots,” are pitched as a way to streamline workflows and automate tasks within specific applications like Word, Excel, and Teams.

Today, the market is saturated with such tools; however, a significant portion of these products are sophisticated retrieval systems, essentially enhanced search interfaces with conversational wrappers, rather than true reasoning agents.

This gap between marketing and capability reflects both the technical limitations of current AI systems and a broader industry trend of overusing the "copilot" label to capitalize on generative AI hype.

Understanding this distinction matters because it shapes enterprise expectations, influences deployment strategies, and determines whether organizations realize genuine productivity gains or simply add another layer of complexity to their technology stack.

The copilot boom begins

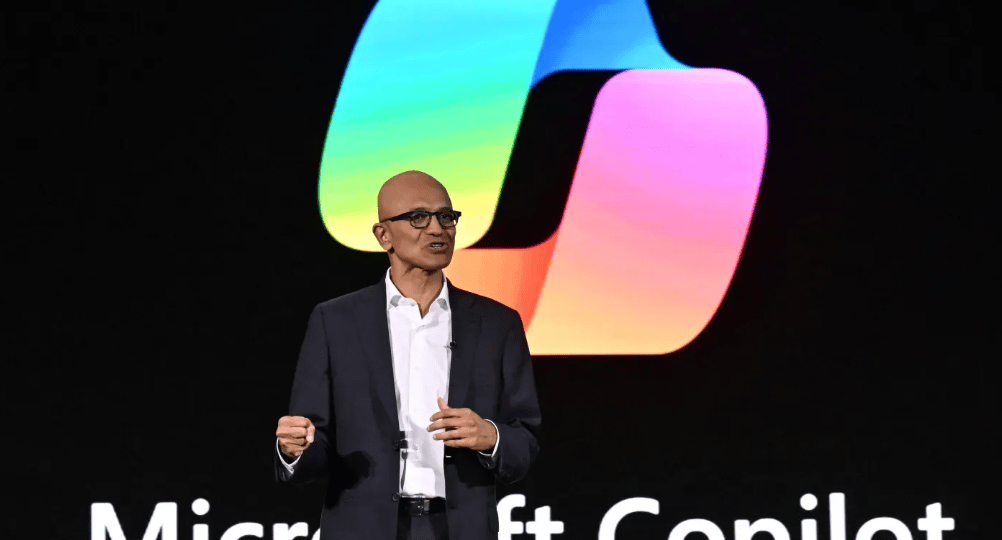

The term "copilot" entered mainstream technology vocabulary following Microsoft's launch of GitHub Copilot in 2021, which used OpenAI's Codex model to suggest code completions.

Microsoft subsequently expanded the brand to Microsoft 365 Copilot in March 2023, positioning AI assistants as collaborative partners embedded across workplace tools.

This naming convention triggered an industry-wide adoption spree. By late 2024, the Copilot label had been applied to hundreds of products across categories, from sales tools to design software to data analytics platforms.

Gartner has estimated that AI agents and copilots will impact 80% of enterprise software by 2028, but analysts have also noted significant variance in what vendors actually deliver under that branding. Gartner has also predicted that over 40% of agentic AI projects will be cancelled by the end of 2027 due to escalating costs, unclear business value, or inadequate risk controls.

Marketing vs reality

The technical reality is more nuanced than marketing suggests.

Research from Anthropic and other AI labs distinguishes between retrieval-augmented generation (RAG) systems, which search and summarize existing information, and agentic systems that can reason, plan, and execute multi-step tasks.

Many products labeled "copilots" operate primarily in the former category, functioning as conversational interfaces to knowledge bases rather than autonomous decision-making assistants.

A December 2023 Stanford HAI study found that while foundation model capabilities improved dramatically, the gap between benchmark performance and real-world reliability remained substantial, particularly for tasks requiring extended reasoning or multi-step planning. However, this technical limitation hasn't prevented aggressive marketing positioning.

How to spot a real copilot

Amidst marketing that looks to capitalize on the rush to integrate AI in enterprise workflows, a clear distinction can be made between copilots and automation masquerading as assistants.

True AI copilots are very different from upgraded search tools. Real copilots can remember past interactions, carry out multi-step tasks on their own, and make sense of new situations. Research from MIT CSAIL notes that effective AI agents need planning, tool use, memory, and the ability to recover from mistakes. Most current “copilots” do not have these capabilities.

By contrast, many retrieval-based systems follow a simple pattern: the user asks a question, the system searches documents, the language model summarizes what it finds, and then it responds.

This still has value, but it is not the same as an agent that can analyze a problem, figure out what information it needs, pull data from several places, combine everything, and offer recommendations without being guided every step of the way.
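The difference between the two patterns can be sketched in a few lines of Python. This is a toy illustration, not how any vendor's product works: the two-document knowledge base, the keyword `search`, the join-based `summarize`, and the hard-coded `plan` are all assumptions standing in for a real index, a language model, and a model-driven planner.

```python
import re

# Hypothetical two-document knowledge base, invented for illustration.
DOCS = {
    "q3-report": "Q3 revenue grew 12 percent on strong renewals.",
    "churn-memo": "Churn rose in the SMB segment during Q3.",
}


def tokens(text: str) -> set[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search(query: str) -> list[str]:
    """Toy keyword search: return documents sharing a word with the query."""
    wanted = tokens(query)
    return [text for text in DOCS.values() if wanted & tokens(text)]


def summarize(passages: list[str]) -> str:
    """Stand-in for a language model summary: simply join the passages."""
    return " ".join(passages) if passages else "No matching documents."


def retrieval_copilot(question: str) -> str:
    """Single pass: ask -> search -> summarize -> respond."""
    return summarize(search(question))


def plan(goal: str) -> list[str]:
    """Hypothetical planner, hard-coded here. An agent would ask a model
    to decide which pieces of information the goal requires."""
    return ["revenue", "churn"]


def agent_copilot(goal: str) -> str:
    """Multi-step loop: plan sub-queries, gather from several places, combine."""
    notes: list[str] = []
    for sub_query in plan(goal):
        # Crude recovery step: fall back to the raw goal if a sub-query finds nothing.
        hits = search(sub_query) or search(goal)
        for hit in hits:
            if hit not in notes:
                notes.append(hit)
    return summarize(notes)
```

Asked "What happened to revenue?", `retrieval_copilot` only ever sees the revenue report, because that is the only document the query matches. `agent_copilot("Explain the quarter")` pulls in the churn memo as well, because the planning step decided it was relevant before any search ran.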

This gap between marketing and capability is also showing up in customer feedback. The Information reported in late 2024 that many businesses were disappointed that copilots required too much prompt engineering and gave uneven results.

Beyond the gap in performance, there are also business implications. Companies often buy copilots expecting real automation, but instead get a conversational search tool.

The enterprise disappointment gap

A new report from MIT’s NANDA initiative, The GenAI Divide: State of AI in Business 2025, shows that while generative AI still holds huge promise for companies, most efforts to turn it into fast revenue growth simply are not working.

Even though businesses are racing to plug the latest models into their operations, only about 5% of AI pilot projects actually lead to meaningful revenue jumps. Most stall out and fail to make a noticeable difference to the bottom line.

The findings come from 150 executive interviews, a survey of 350 employees, and a review of 300 public AI deployments. They highlight a widening gap between the few companies seeing real results and the many whose projects never get off the ground.

Pricing can become an issue as well. Some tools are sold at premium rates even though they mainly do retrieval and summarization, which are tasks that cheaper systems can handle.

The user experience suffers too. If a tool behaves like an enhanced search engine, it usually needs very specific queries and does not get better with use. A real copilot should learn from how people work and respond well to unclear requests.

Why user experience falls short

Nielsen Norman Group research has shown that conversational AI can actually make work harder if it does not provide a clear advantage over traditional interfaces.

The market now has three rough layers. At the top are copilots like GitHub Copilot and Anthropic’s Claude with extended context, which show real agent-like behavior in certain tasks.

In the middle are good-quality retrieval systems with chat interfaces that are honest about their limits. At the bottom are products using the Copilot label as marketing for what is essentially a search tool.

However, not everyone agrees with the idea that most copilots are mere automations hiding behind branding.

Some experts say it is unfair to dismiss retrieval-based copilots as “just search.”

Analysts like Benedict Evans argue that even simple conversational interfaces can meaningfully boost productivity by making information easier to access. Others note that “copilot” is only a metaphor to begin with.

As Ethan Mollick points out, the real issue is transparency: if vendors clearly explain what their tools can and cannot do, customers can judge the value for themselves.

Supporters of today’s copilots also argue that capabilities are evolving quickly. While many tools are mostly retrieval systems now, companies are already adding agentic features as models improve.

Updates like GPT-4’s expanded function calling and Anthropic’s tool use are seen as steps toward more autonomous systems. From this perspective, the “copilot” label reflects the direction of travel, not the current feature set.

Some researchers also say simplicity can be a strength.

A Harvard Business School study found that professionals often prefer reliable, auditable tools over opaque agents that make unpredictable decisions. In highly regulated fields, a trustworthy search system with clear sourcing may be more useful than an autonomous assistant that is harder to verify.

Where is the market headed?

Advancements in AI have allowed many companies to design and market tools promising an overhaul of workflows and productivity. However, amidst the chaos of AI deployment, it is important to understand whether a product fills a gap or is just trying to rebrand existing tools with AI slapped on the label.

The copilot market is shifting toward specialization instead of one-size-fits-all tools. Companies want clearer ways to measure what these systems can actually do.

As such, new benchmarks are becoming more important. Buyers also expect more transparency, and standards like the Model Context Protocol are making it easier to see how AI tools really work.

Meanwhile, vendors are moving away from broad copilots and focusing on niche tools for fields like law, medicine, and finance, which often deliver more value. Regulations such as the EU AI Act will push companies to back up their claims and be honest about limitations.

A bigger challenge remains unresolved. Current models still rely on pattern matching rather than true reasoning. Improving this may require entirely new architectures. As the market matures, the real question for enterprises becomes: “What can this tool actually do for our workflow?”

Quick Bits, No Fluff

AI research is drowning in “slop”: Top conferences are flooded with low-quality papers and overstretched, error-prone peer review.

Musk hints at “text while FSD drives”: The Verge reminds everyone it’s still illegal and unsafe in almost every state.

Spotify Wrapped sparks a recap clone army: Streaming platforms and brands are rolling out their own Wrapped-style year-in-review gimmicks.

Thursday Poll

🗳️ Across the tools you use, how many so-called “AI copilots” feel like real agents rather than fancy search?

3 Things Worth Trying

Run a “copilot or search?” audit: List every AI tool you use and classify each one: retrieval-only vs. true agent (planning, tools, memory). Kill or downgrade anything that’s just an expensive search.

Design one real agentic workflow: Pick a single task (e.g., monthly report, sales follow-up sequence) and map how an AI agent would own 80% of the steps, including APIs, tools, and approvals, before you buy another “copilot” license.

Rewrite vendor promises into testable outcomes: Take the marketing copy for one AI tool (“boosts productivity,” “automates workflows”) and convert it into 3 measurable KPIs you’ll track over 60–90 days. If it can’t be measured, it doesn’t ship as “critical.”
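For the “copilot or search?” audit, a spreadsheet works fine, but the classification rule is simple enough to sketch in Python. This is only one possible reading of the checklist: the `Tool` fields, the rule that a tool counts as an agent only when it shows planning, tool use, and memory together, and the example tool names and prices are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Tool:
    name: str
    planning: bool       # can it break a task into steps on its own?
    tool_use: bool       # can it call APIs or other software?
    memory: bool         # does it remember past interactions?
    monthly_cost: float  # per-seat price, in whatever currency you track


def classify(tool: Tool) -> str:
    """Assumed rule: 'agent' only if all three capabilities are present."""
    if tool.planning and tool.tool_use and tool.memory:
        return "agent"
    return "retrieval-only"


def audit(tools: list[Tool]) -> dict[str, list[str]]:
    """Group tool names by category so downgrade candidates are easy to spot."""
    report: dict[str, list[str]] = {"agent": [], "retrieval-only": []}
    for tool in tools:
        report[classify(tool)].append(tool.name)
    return report


def retrieval_spend(tools: list[Tool]) -> float:
    """Total monthly cost of everything classified as retrieval-only."""
    return sum(t.monthly_cost for t in tools if classify(t) == "retrieval-only")


# Hypothetical inventory, invented for this example.
inventory = [
    Tool("DocsChat", planning=False, tool_use=False, memory=False, monthly_cost=30.0),
    Tool("OpsAgent", planning=True, tool_use=True, memory=True, monthly_cost=45.0),
    Tool("SalesHelper", planning=False, tool_use=True, memory=False, monthly_cost=25.0),
]
```

Running `audit(inventory)` and `retrieval_spend(inventory)` on your own list gives a quick view of which licenses are paying agent prices for search behavior.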
