Roko's Basilisk

Can AI Survive The Heat?

Plus: Agent fatigue at Microsoft, ad-like ChatGPT recs, xAI’s Memphis megaproject.

Here’s what’s on our plate today:

🧪 When data centers overheat, AI’s entire stack wobbles.

🧠 Microsoft cools AI hype, ChatGPT shops, Musk builds Colossus.

💡 Roko’s Pro Tip on auditing your AI infrastructure dependencies.

🗳️ Poll: Is data center cooling AI’s biggest weak link?

Let’s dive in. No floaties needed…

Shoppers are adding to cart for the holidays

Over the next year, Roku predicts that 100% of the streaming audience will see ads. For growth marketers in 2026, CTV will remain an important “safe space” as AI creates widespread disruption in the search and social channels. Plus, easier access to self-serve CTV ad buying tools and targeting options will lead to a surge in locally targeted streaming campaigns.

Read our guide to find out why growth marketers should make sure CTV is part of their 2026 media mix.

*This is sponsored content

The Laboratory

What the CME outage reveals about AI’s biggest weak link

In the early 1990s, the world was preparing for a technological shift that would reshape social, political, and economic systems. When the internet became publicly available, few imagined a future where computers more powerful than those that sent humans to the moon would become handheld devices or where machines could generate information rather than simply transmit it.

Yet none of these changes would have been possible without the smartphone. The devices made the internet accessible to millions and transformed how people interact with technology. If the internet is the linchpin of the modern world, the smartphone is the packaging that made it usable and widely adopted.

In 2025, another major technological shift is underway. With the advent of artificial intelligence, businesses are rethinking their workflows, end users are adapting to devices with multimodal capabilities, and governments around the world are scrambling to regulate advanced AI systems.

Why data centers matter

In this era, AI is the equivalent of the smartphone: it is the face of the technology that most people interact with. Behind the curtain, however, it is data centers that make AI possible.

Recently, the public was reminded of how important this linchpin is when the global futures market was disrupted. The cause: an outage at a data center serving CME Group, the world's largest exchange operator.

The ‘Jesus nut’

The ‘Jesus nut’ found on certain helicopters is the single large nut that secures the main rotor to the mast, meaning the entire aircraft’s ability to fly depends on it staying in place. Pilots call it the Jesus nut because if it ever fails mid-flight, the only thing left to do is pray.

For AI systems, data centers are like the Jesus nut. A data center is a dedicated facility that houses the computing, networking, and storage infrastructure required to build, run, and deliver modern applications and services.

In the era of AI, data centers have become even more specialized. They provide the advanced hardware needed to train, deploy, and scale AI systems, including high-performance GPUs and TPUs, high-bandwidth networking, massive storage systems, and robust cooling and power designs that support extremely intensive workloads.

AI models require fast parallel computation, rapid access to large datasets, and stable environments that operate at enormous scale. Data centers supply all of these capabilities.

Naturally, since data centers are such an integral part of this technological shift, countries around the world have invested heavily to keep these linchpins running at full capacity. However, modern data centers have a big problem, one that is not easily fixed.

The CME outage

How did a data center serving the world's largest exchange operator suffer one of its longest outages? The answer comes down to one word: heat.

According to a Reuters report, CME said the outage was caused by a cooling system failure at data centers operated by CyrusOne.

CyrusOne noted that its Chicago-area site experienced problems that disrupted service for clients, including CME. As a result, trading halted on CME’s EBS platform for major currency pairs, along with key futures contracts tied to West Texas Intermediate crude, the Nasdaq 100, the Nikkei, palm oil, and gold.

The problem CME faced is not new. AI workloads are estimated to generate 5 to 10 times more heat than traditional computing, pushing power densities from the historic standard of 5-8 kilowatts per rack to 60-120 kilowatts or higher.
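A quick way to see what those rack figures imply, as a sketch that assumes nearly all of a rack's electrical draw ends up as heat (so kilowatts of power roughly equal kilowatts of heat to remove). The constants are the ranges quoted above; the function name is illustrative. Note that this compares rack densities, a different measure from the 5-to-10x workload estimate, so the two ranges need not match.

```python
# Illustrative arithmetic only. Assumption: a rack's heat load (kW) is
# approximately equal to its electrical power draw (kW).

LEGACY_RACK_KW = (5.0, 8.0)   # historic standard, kW per rack (from the article)
AI_RACK_KW = (60.0, 120.0)    # dense AI racks, kW per rack (from the article)


def density_multiplier_range(old: tuple[float, float],
                             new: tuple[float, float]) -> tuple[float, float]:
    """Return the smallest and largest possible ratio of new to old rack density."""
    low = new[0] / old[1]   # least dense AI rack vs. densest legacy rack
    high = new[1] / old[0]  # densest AI rack vs. least dense legacy rack
    return low, high


low, high = density_multiplier_range(LEGACY_RACK_KW, AI_RACK_KW)
print(f"Per-rack heat load grows roughly {low:.1f}x to {high:.0f}x")
```

With the article's ranges, per-rack heat rises by roughly 7.5x to 24x, which helps explain why air-cooled facilities struggle to keep up.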

Since most data centers rely on traditional air cooling systems, they are now scrambling to shift to liquid cooling technologies that remain expensive, complex, and largely untested at scale.

Additionally, with data center electricity consumption projected to more than double to 945 terawatt-hours by 2030, and cooling accounting for up to 40% of that energy use, the industry faces a dual crisis: keeping servers cool while managing explosive growth in both energy demand and water consumption.
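The scale of the cooling share can be checked with simple arithmetic. This sketch assumes the "up to 40%" cooling share applies unchanged to the 945 TWh projection for 2030, which the figures above do not guarantee; treat the result as a rough ceiling, not a forecast. The function name is illustrative.

```python
# Rough upper-bound arithmetic using the article's figures.
# Assumption: the maximum cooling share applies to the 2030 projection as-is.

PROJECTED_2030_TWH = 945.0  # projected data center electricity use in 2030
MAX_COOLING_SHARE = 0.40    # "up to 40%" of that energy goes to cooling


def cooling_energy_twh(total_twh: float, cooling_share: float) -> float:
    """Energy attributable to cooling, given a total and a cooling share in [0, 1]."""
    if not 0.0 <= cooling_share <= 1.0:
        raise ValueError("cooling_share must be between 0 and 1")
    return total_twh * cooling_share


upper_bound = cooling_energy_twh(PROJECTED_2030_TWH, MAX_COOLING_SHARE)
print(f"Cooling could account for up to about {upper_bound:.0f} TWh in 2030")
```

Under that assumption, cooling alone could approach 378 TWh a year.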

The outage at the data center serving CME Group reflects this rapid change and the challenge it poses.

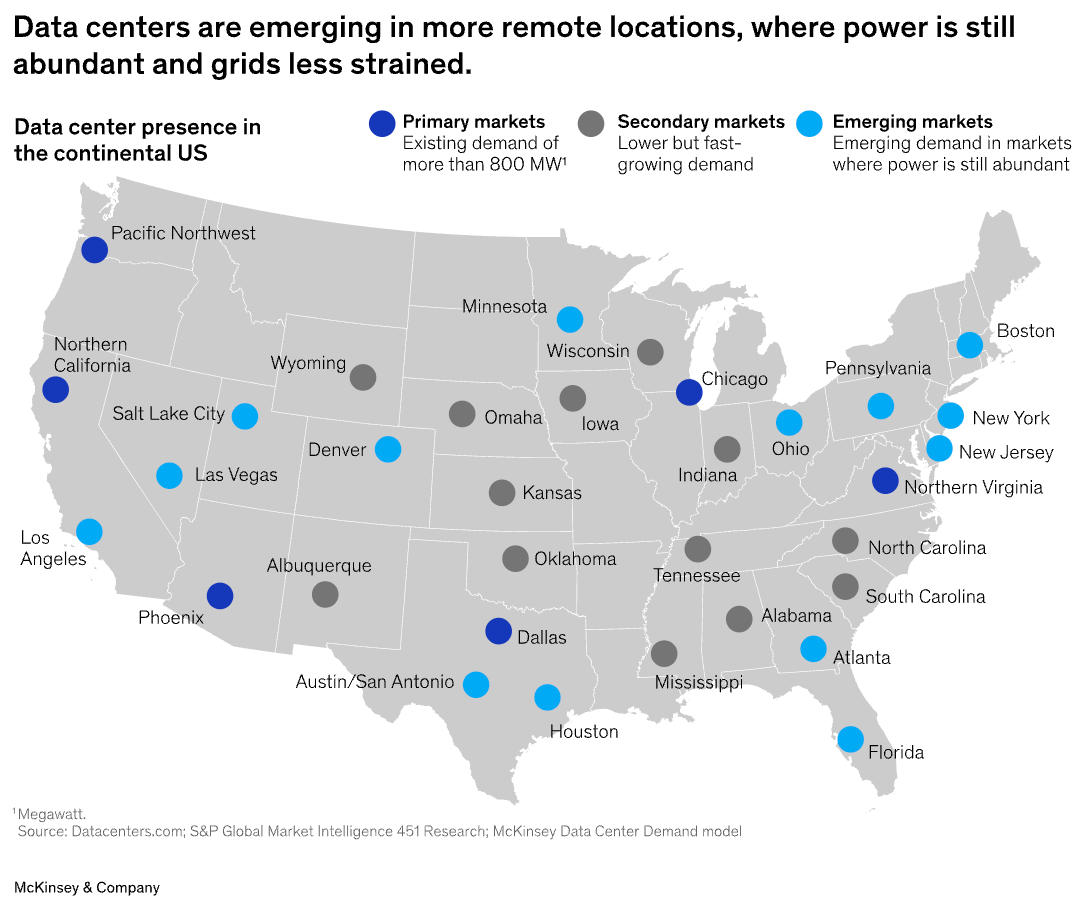

This is not just a technical hurdle; it is a fundamental problem, and how it is solved will shape where data centers can be built, how utilities plan infrastructure, and whether AI's growth can be sustained without straining local power grids and water supplies.

The search for solutions

As with most problems, the solution to data center overheating can be found in technological advancement.

The physics are simple to understand. Air cooling can no longer keep up with the heat produced by dense AI systems, so data centers need to shift to liquid-based solutions, because liquids absorb and carry heat far more effectively than air.

This has made liquid cooling essential for the industry.
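The physics can be made concrete with the standard heat-transport relation Q = m_dot * c_p * delta_T. The sketch below estimates how much water versus air must flow to carry away the heat of one dense rack. The 100 kW rack and 10 K temperature rise are hypothetical inputs, and the fluid properties are approximate room-temperature textbook values, not figures from the article.

```python
# Heat carried by a coolant stream: Q = m_dot * c_p * delta_T
#   Q in kW, m_dot in kg/s, c_p in kJ/(kg*K), delta_T in K.
# Fluid properties are approximate values near room temperature.

WATER = {"cp_kj_per_kg_k": 4.18, "density_kg_per_m3": 997.0}
AIR = {"cp_kj_per_kg_k": 1.005, "density_kg_per_m3": 1.18}


def volumetric_flow_m3_per_s(heat_kw: float, delta_t_k: float,
                             fluid: dict[str, float]) -> float:
    """Coolant volume per second needed to absorb heat_kw with a delta_t_k rise."""
    mass_flow = heat_kw / (fluid["cp_kj_per_kg_k"] * delta_t_k)  # kg/s
    return mass_flow / fluid["density_kg_per_m3"]                  # m^3/s


RACK_KW = 100.0  # hypothetical dense AI rack (article cites 60-120 kW)
DELTA_T = 10.0   # hypothetical coolant temperature rise, K

water = volumetric_flow_m3_per_s(RACK_KW, DELTA_T, WATER)
air = volumetric_flow_m3_per_s(RACK_KW, DELTA_T, AIR)
print(f"Water: {water * 1000 * 60:.0f} L/min")
print(f"Air:   {air:.1f} m^3/s")
print(f"Air needs ~{air / water:,.0f}x the volume of water")
```

With these inputs, air needs on the order of 3,500 times the volume of water to move the same heat, which is why dense racks push operators toward liquid loops.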

One approach is direct-to-chip cooling, where liquid flows through cold plates mounted on hot components such as CPUs and GPUs. This removes most of a server's heat, leaving only a small amount, mainly from smaller components, for air systems to handle.

Another approach is immersion cooling, which places entire servers in tanks of nonconductive fluid. The servers can operate safely while submerged, and the method captures almost all waste heat and reduces energy use. However, it requires major building changes and reinforced floors to support heavy tanks.

A third option is rear-door heat exchangers, which add cooling panels to the back of racks. Hot air passes through the panels, and water or refrigerant absorbs the heat. This is easier to retrofit than immersion cooling but is less effective than direct-to-chip systems.
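To see why direct-to-chip cooling still needs some air handling, the sketch splits a rack's heat between a liquid loop and room air. The 80% liquid capture fraction is a hypothetical placeholder, not a figure from the article or any vendor; the rack sizes come from the ranges quoted earlier, and the function name is illustrative.

```python
# Hypothetical split of a rack's heat between a liquid loop and room air.
# LIQUID_CAPTURE_FRACTION is an assumed placeholder, not a measured value.


def residual_air_load_kw(rack_kw: float, liquid_capture_fraction: float) -> float:
    """Heat (kW) left for air cooling after a liquid loop removes its share."""
    if not 0.0 <= liquid_capture_fraction <= 1.0:
        raise ValueError("liquid_capture_fraction must be between 0 and 1")
    return rack_kw * (1.0 - liquid_capture_fraction)


RACK_KW = 120.0                # top of the article's 60-120 kW range
LIQUID_CAPTURE_FRACTION = 0.8  # hypothetical share removed by cold plates
LEGACY_RACK_KW = 8.0           # top of the article's 5-8 kW legacy range

air_kw = residual_air_load_kw(RACK_KW, LIQUID_CAPTURE_FRACTION)
print(f"Residual air load: {air_kw:.0f} kW per rack "
      f"({air_kw / LEGACY_RACK_KW:.1f}x a legacy {LEGACY_RACK_KW:.0f} kW rack)")
```

Under that assumption, the leftover air load of a 120 kW rack (about 24 kW) is still roughly three times an entire legacy 8 kW rack.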

These technologies are already being deployed to address data center heating challenges.

A new report from Dell'Oro Group, a well-regarded source of data on telecom, security, networking, and data center markets, says the liquid cooling sector has reached a turning point. The firm expects liquid cooling to move into mainstream use starting in the second half of 2024 and to create a market worth more than $15 billion over five years.

Nvidia also influenced the market when it announced that the next generation of its Blackwell chips will use liquid cooling by default. Since Nvidia is the leading producer of AI chips, this decision signaled that liquid cooling is becoming the standard.

Despite these technological advances, however, adoption remains a challenge.

The challenges to liquid cooling

The biggest issue is cost. Liquid cooling requires special equipment such as tanks, pumps, heat exchangers, and a lot of plumbing. Some companies say their immersion systems are now close in price to air cooling, but the upfront cost can still run from hundreds of thousands to several million dollars, depending on the size of the project.

Another problem is that most data centers are not built for the power densities that liquid cooling enables. Fewer than 5% of data centers can handle even 50 kilowatts per rack, while liquid-cooled racks often exceed 100 kilowatts. Upgrading older buildings is a major project.

Then there is the problem of thermal management, which cannot be separated from the larger power crisis. Data centers are bumping into hard limits on electricity availability. Over $64 billion worth of U.S. and Canadian data center projects have been delayed or canceled since 2023 due to grid constraints, according to industry tracking.

And though data centers are increasingly being sited in power-abundant regions, some operators have resorted to on-site natural gas generators, or are even turning to small nuclear reactors, to bypass grid constraints.

Liquid cooling also raises the question of where the water will come from. While direct water use gets attention, the full picture is more complex. Data centers consume water in three ways: direct on-site cooling, indirect consumption through electricity generation (thermoelectric plants use substantial water), and embedded water in supply chains (chip manufacturing is extremely water-intensive).

On-site cooling is estimated to evaporate 0.26 to 2.4 gallons of water per kilowatt-hour of server energy, depending on climate and system design. Hyperscalers such as Microsoft are now turning to AI to help tackle this problem.
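To put the evaporation estimate in context, the sketch below converts it into daily water use for a constant server load. The 1 MW load is a hypothetical example and the function name is illustrative; real consumption depends on the climate and system design factors mentioned above.

```python
# Illustrative range of on-site evaporative water use, using the article's
# estimate of 0.26 to 2.4 gallons evaporated per kWh of server energy.

GALLONS_PER_KWH = (0.26, 2.4)


def daily_water_gallons(it_load_kw: float, hours: float = 24.0) -> tuple[float, float]:
    """Low and high evaporation estimates for a constant IT load over `hours`."""
    energy_kwh = it_load_kw * hours
    return energy_kwh * GALLONS_PER_KWH[0], energy_kwh * GALLONS_PER_KWH[1]


low, high = daily_water_gallons(it_load_kw=1_000.0)  # hypothetical 1 MW of servers
print(f"~{low:,.0f} to {high:,.0f} gallons per day")
```

For 1 MW of servers running all day (24,000 kWh), that works out to roughly 6,240 to 57,600 gallons, a nearly tenfold spread driven by climate and design.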

The future of AI’s linchpin

The global economy has bet big on AI, which is driving drastic changes in how people live and work, so much so that some worry investors may have overdone it. Whether or not that is true, one thing is clear: AI needs data centers, and those data centers need to stay cool for more people to benefit from the technology.

Liquid cooling may be part of the solution, but it too needs refinement before it can meet data centers' growing cooling needs without disrupting local ecosystems.

The CME outage showed how one point of failure can shake the entire system. In the AI era, data centers are that point. Protecting the Jesus nut of AI will decide how far the technology can scale.

Roko Pro Tip

💡 If you’re betting on AI, start treating ‘where it runs’ as seriously as ‘what it does’.
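One hedged way to act on this tip: list which data center sites each AI-dependent service runs in, then flag any site whose failure would take a service fully offline, a "Jesus nut" in the CME sense. The service names, site names, and function below are all hypothetical; a real audit would pull this mapping from your own cloud or vendor inventory.

```python
# Hypothetical dependency map: which data center sites each AI-backed service uses.
# All service and site names are made up for illustration.
from collections import defaultdict

SERVICE_SITES = {
    "chat-assistant": ["site-a"],
    "fraud-scoring": ["site-a", "site-b"],
    "doc-search": ["site-a"],
    "forecasting": ["site-c"],
}


def single_points_of_failure(service_sites: dict[str, list[str]]) -> dict[str, list[str]]:
    """Map each site to the services that would go fully offline if that site failed."""
    exposed: dict[str, list[str]] = defaultdict(list)
    for service, sites in service_sites.items():
        if len(set(sites)) == 1:  # no second site to fail over to
            exposed[sites[0]].append(service)
    return dict(exposed)


for site, services in single_points_of_failure(SERVICE_SITES).items():
    print(f"{site}: {', '.join(services)}")
```

In this made-up inventory, an outage at "site-a" would take down two services at once, while "fraud-scoring" survives because it has a second site.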

Launch fast and design beautifully with Framer.

First impressions matter. With Framer, early-stage founders can launch a beautiful, production-ready site in hours. No dev team, no hassle. Join hundreds of YC-backed startups that launched here and never looked back.

One year free: Save $360 with a full year of Framer Pro, free for early-stage startups.

No code, no delays: Launch a polished site in hours, not weeks, without hiring developers.

Built to grow: Scale your site from MVP to full product with CMS, analytics, and AI localization.

Join YC-backed founders: Hundreds of top startups are already building on Framer.

Eligibility: Pre-seed and seed-stage startups, new to Framer.

*This is sponsored content

Bite-Sized Brains

Microsoft’s AI comedown: Microsoft has reportedly cut its AI sales growth targets as customers hesitate to pay for agents that still feel unproven in the real world.

ChatGPT suggestions or just ads? OpenAI is testing a suggestion shop inside ChatGPT that surfaces shopping ideas in responses, insisting they’re not ads, even as they look like them.

Musk’s mega-AI in Memphis: xAI’s planned Colossus supercomputer in Memphis’ Boxtown neighborhood is triggering local pushback over what a massive AI facility means for residents and infrastructure.

Monday Poll

🗳️ What worries you most about AI’s physical Jesus nut, the data center?

Meme Of The Day
