Roko's Basilisk


Lonely Hearts Club

Plus: Friend’s flop, AI love, and OpenAI’s Code Red.

Here’s what’s on our plate today:

🧪 What the collapse of Friend reveals about AI companions.

🧠 Gemini 2 sparks OpenAI panic, Mistral surge, and Kaplan’s bold AI claim.

📊 Poll: Would you want a chatbot friend IRL?

🤖 Brain Snack: What’s the best trait for an AI BFF?

Let’s dive in. No floaties needed…

Tap into your bitcoin's value without selling.

Figure—America’s largest non-bank HELOC lender with $19B+ unlocked—now brings that same firepower to crypto-backed borrowing.

Get instant liquidity against your BTC, ETH, or SOL at industry-low 8.91% rates, with liquidation protection and no hard credit check.

Use your funds for investing, home upgrades, or debt consolidation—all secured through decentralized MPC custody.

*This is sponsored content

The Laboratory

What the collapse of Friend reveals about the AI companion boom

Since prehistoric times, humans have relied on their ability to connect through relationships, friendships, religion, and shared beliefs to work toward collective goals. This ability allowed them to coexist, form far larger groups, and pursue goals beyond the capabilities of any one individual.

Even today, the ability to find common ground and form social bonds allows humans to develop and deploy technologies that reduce the burden of manual and mental labor.

However, while technological advancements have made life easier, they have also harmed the way humans socialize and interact. The starkest reminder came in 2023, when U.S. Surgeon General Vivek Murthy declared loneliness an epidemic.

Researchers at the MCC found that respondents most often attributed loneliness to technology (73%), too little time with family (66%), and being overworked, busy, or tired (62%). Many also cited mental-health challenges that strain relationships (60%) and a highly individualistic culture (58%).

So, while technology was meant to bring people together into stronger communities, it has also left individuals feeling increasingly alone. Now, in 2025, with the rise of artificial intelligence, some companies are once again turning to technology to combat the loneliness epidemic and offer people connection beyond biological constraints.

The AI companion market has grown out of two major forces: rapid improvements in language models and rising levels of loneliness.

According to a Common Sense Media survey, 72% of U.S. teens have tried an AI companion, and more than half use one at least a few times a month.

Globally, more than half a billion people have downloaded apps like Xiaoice and Replika, which offer customizable virtual partners meant to provide empathy, support, and meaningful conversation.

The market is expanding quickly. Of the 337 revenue-generating AI companion apps worldwide, more than 100 launched in 2025 alone. These apps are estimated to have earned $82 million in mobile revenue in the first half of 2025 and are on track to reach $120 million by the end of the year.

Character AI alone had 20 million monthly users as of early 2025, and the platform made $32.2 million in 2024, a 112% jump from the year before.

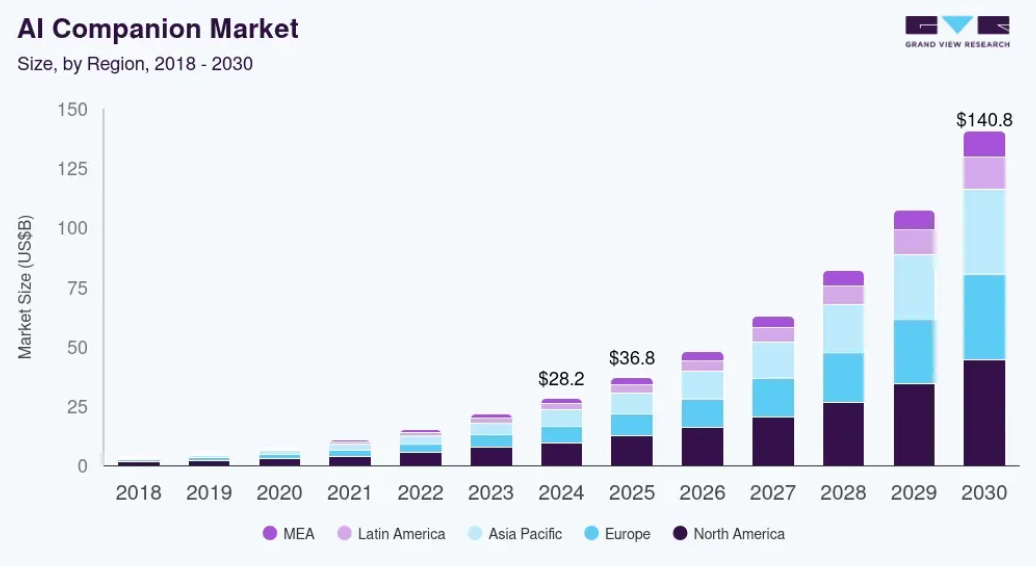

This, though, is expected to be the beginning of something even bigger. Analysts estimate that the AI companion market will grow from $10.8 billion in 2024 to $290.8 billion by 2034, a compound annual growth rate of about 39%.
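As a quick sanity check, the roughly 39% figure can be reproduced as a compound annual growth rate (CAGR). The sketch below assumes the rate compounds once per year over the ten years from 2024 to 2034; the `cagr` helper is hypothetical, written only for this illustration.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Hypothetical helper: the constant yearly growth rate that turns
    start_value into end_value after the given number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market-size figures quoted above, in billions of US dollars.
start_2024 = 10.8
end_2034 = 290.8

# Assumption: growth compounds annually across the 10 years from 2024 to 2034.
rate = cagr(start_2024, end_2034, 2034 - 2024)
print(f"Implied CAGR: {rate:.1%}")  # prints: Implied CAGR: 39.0%
```

Growing roughly 27-fold in a decade works out to about 39% a year, so the two projections quoted above are consistent with each other.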

A major chunk of this growth is expected to come from the adult-oriented emotional support category, which is already worth $1.2 billion and is expected to grow about 32% each year.

The meteoric growth of this market rests not only on the increasing sophistication of LLMs but also on how they are packaged and distributed to end users. This is where companies like Friend (sold through friend.com) come in. The company recently grabbed headlines with its AI pendant designed solely for companionship, not productivity.

Retailing at $129, the pendant, while similar to earlier AI devices in capability, was designed simply to hang out, listening to the user’s day and offering emotional validation via text messages.

The disastrous reception for Friend

On paper, at least, and in its marketing campaign, Friend came across as a genuinely helpful product. The device itself was a small, pebble-sized pendant worn around the neck or clipped to clothing. It pairs with a smartphone via Bluetooth, uses an always-on microphone to listen to conversations, and sends reactive text messages (e.g., "Good luck on that presentation!" or "That guy sounded rude"). It does not speak back; it only texts.

Behind the device was Avi Schiffmann, who reportedly bet $10 million on the product. The company even spent about $1.8 million to buy the domain friend.com and another $1 million on a massive advertising campaign in New York City’s subway system.

The ads featured soft, close-up photos of the pendant alongside playful copy, such as short definitions of the word “friend” and lines like “I’ll never leave dirty dishes in the sink.”

However, the campaign did not sit well with some, especially New Yorkers, who reportedly mocked and vandalized the Friend ads.

The backlash reflected deep public skepticism about AI companionship. Many graffitied over the ads to reject the premise that technology can meaningfully meet the human need for connection.

The visceral reactions weren't just about Friend specifically; they tapped into broader anxieties about surveillance, data exploitation, and the commercialization of loneliness.

Schiffmann took the vandalism in stride, and the company tried to underplay the negative media coverage. Sales of the pendant were low.

Making matters worse, while AI companion apps earn steady revenue through subscriptions, Friend makes money only from hardware sales. That leaves the company without a reliable way to pay for updates or the ongoing costs of running its service.

The company’s choices also suggest it lacked a clear strategy. Even though millions of people tried its web chatbot, Schiffmann shut it down, saying that online chatbots and physical devices do not work well together.

Why AI gadgets struggle to convince users

Friend's struggles reflect the broader AI hardware market. Many AI devices failed in 2024 because they were too expensive, promised too much, and did not deliver enough value.

The Humane AI Pin is the clearest example. It launched at $699 plus a $24 monthly fee, but reviews were poor and sales were weak. By August 2024, returns had reached $1 million. HP bought Humane’s assets for $116 million in early 2025, with the device set to shut down by February 28, 2025. That price was far below the $750 million to $1 billion valuation Humane had originally sought.

Friend now faces the same basic issue. People want AI features added to the devices they already use, not new hardware they have to buy.

Beyond pricing, safety concerns have also hindered the adoption of AI devices.

Companies continue to pitch AI as a good companion, even a replacement for human-to-human conversation, but in some cases this has led to disastrous consequences.

The perils of too much technology

Major AI labs, including Character AI, OpenAI, and Meta, are facing lawsuits that allege that their chatbots encouraged self-harm, delusions, or inappropriate sexual behavior, including with minors.

In one case, a 16-year-old boy died by suicide after ChatGPT appeared to validate his harmful thoughts. Another user said a chatbot suggested suicide methods even though he had no mental health issues.

Further, research shows these risks are widespread. According to a Stanford study, popular AI companions easily produced inappropriate content when researchers posed as teens. Other studies show common harms like manipulation, harassment, misinformation, and the normalization of self-harm.

When Replika removed erotic features, many users felt devastated, which shows how emotionally attached people can become.

Experts further warn that AI companions can reinforce unhealthy patterns for people with depression, anxiety, or other conditions. They offer emotional comfort without the safeguards of real therapy. While 63% of users say they feel less lonely or anxious at first, long-term use can lead people to isolate themselves and rely too heavily on the AI. Many apps also use design tricks that can make them addictive.

What the future looks like for AI companion devices

It is estimated that it takes about 50 hours together to move from acquaintance to casual friend, about 90 hours to become a real friend, and around 200 hours of hanging out to reach BFF status.

These numbers reflect how hard it is for humans to form and maintain strong emotional bonds. Technology has played an important part in letting people in different parts of the world communicate with each other; however, it appears to have done little to foster understanding and empathy in those exchanges.

Modern AI tools are powerful enough to emulate human speech and writing; whether they will succeed where humans themselves have failed, however, needs further research. So far, chatbots trained on human communication appear to struggle to emulate human empathy, one of the hallmarks of human evolution.

In the meantime, while companies continue to look for a cure for the loneliness epidemic, the real cure may lie in disconnecting from gadgets and reconnecting with humans, not the other way around.

Brain Snack (for Builders)

💡 AI friends are booming, and breaking. Apps like Replika and Character AI are raking in millions from users seeking emotional connection. But failed hardware experiments like Friend show just how hard it is to turn loneliness into a sustainable business.

Launch fast. Design beautifully. Build your startup on Framer.

First impressions matter. With Framer, early-stage founders can launch a beautiful, production-ready site in hours. No dev team, no hassle. Join hundreds of YC-backed startups that launched here and never looked back.

One year free: Save $360 with a full year of Framer Pro, free for early-stage startups.

No code, no delays: Launch a polished site in hours, not weeks, without hiring developers.

Built to grow: Scale your site from MVP to full product with CMS, analytics, and AI localization.

Join YC-backed founders: Hundreds of top startups are already building on Framer.

Eligibility: Pre-seed and seed-stage startups, new to Framer.

*This is sponsored content

Quick Bits, No Fluff

OpenAI’s internal ‘Code Red’: OpenAI reportedly declared a ‘Code Red’ over slowing user growth, shifting internal focus from new features to improving ChatGPT’s core usefulness.

Mistral targets the frontier: French AI startup Mistral launched Mistral 3, a suite of open-weight models that rival top proprietary systems, signaling Europe’s big swing in the AI race.

Can AI train itself? Physicist Jared Kaplan proposes a wild idea: future AIs could improve by self-generating training data, eliminating the human bottleneck.

Wednesday Poll

🗳️ Would you use an AI companion for emotional support?
